Unlocking the Secrets: How Does Image Recognition Work in AI? (And Why It’s Changing Everything)

What is Image Recognition in AI?

Image recognition enables computers to identify and classify objects, people, places, and actions within digital images or videos. It is a subset of computer vision, a field that covers tasks such as image classification, object detection, and image segmentation. Image recognition uses machine learning models, particularly deep learning algorithms, to analyze visual data and make predictions about the content of images.

How AI Enhances Image Recognition Capabilities

AI enhances image recognition capabilities by using deep learning techniques, such as convolutional neural networks (CNNs), which are particularly effective in processing image data. These neural networks are trained on large datasets of labeled images, allowing them to learn and recognize patterns and features within images. The process involves several layers of analysis, where each layer extracts different features, from simple edges to complex textures and shapes, ultimately enabling the system to identify and classify objects accurately.

Importance of Image Recognition in Today’s World

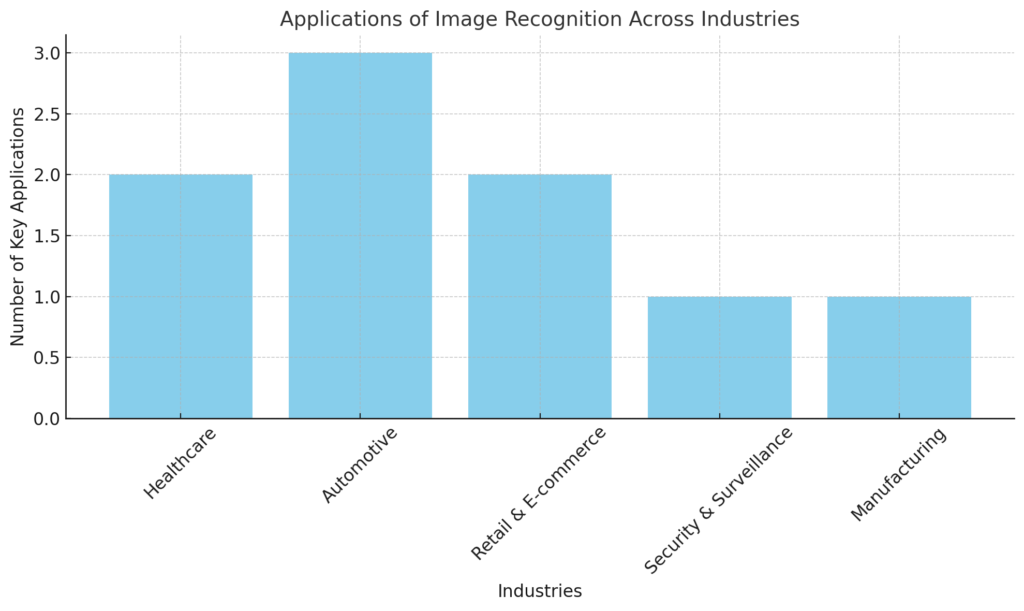

Applications in Various Industries

Image recognition has a wide range of applications across different industries:

- Healthcare: Used for diagnostic purposes, such as identifying tumors or fractures in medical imaging.

- Automotive: Essential for self-driving cars to detect and respond to road signs, pedestrians, and other vehicles.

- Retail and E-commerce: Enhances customer experience through visual search and product recommendations.

- Security and Surveillance: Used in facial recognition systems for security purposes.

- Manufacturing: Helps in quality control by detecting defects in products on assembly lines.

Impact on Business Operations and Daily Life

Image recognition technology significantly impacts business operations by automating tasks that require visual inspection, thus increasing efficiency and reducing human error. In daily life, it is integrated into various applications, such as unlocking smartphones with facial recognition, organizing photos in digital libraries, and enabling augmented reality experiences. The technology also plays a crucial role in enhancing accessibility for visually impaired individuals by providing descriptions of visual content.

Overall, image recognition in AI is a transformative technology that continues to evolve, offering new possibilities and applications across multiple domains. Its ability to process vast amounts of visual data quickly and consistently, handling tasks that once required human visual perception, makes it an increasingly essential tool in modern society.

The Basics of Image Recognition

Defining Image Recognition

Image recognition is a technology in the field of computer vision that enables machines to identify and categorize objects, people, places, and actions within images or videos. It involves analyzing the visual content and assigning labels to the recognized elements, often using deep learning techniques like convolutional neural networks (CNNs) to process and interpret the data.

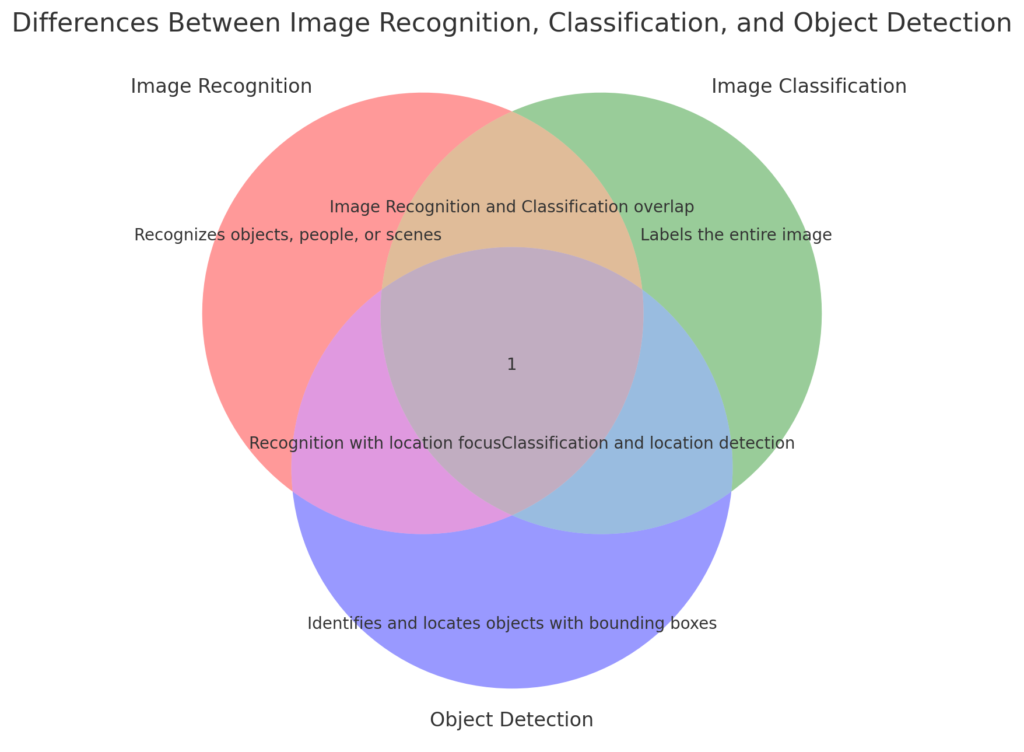

Differences Between Image Recognition, Image Classification, and Object Detection

- Image Recognition: This is a broad term that encompasses identifying and categorizing various elements within an image. It may involve recognizing objects, people, or scenes without necessarily pinpointing their exact locations.

- Image Classification: This task focuses on assigning a single label to an entire image based on its overall content. For instance, an image of a cat will be labeled as “cat” without identifying specific features or locations within the image.

- Object Detection: This involves not only identifying objects within an image but also determining their precise locations. Object detection uses bounding boxes to highlight where each object is situated, making it more complex than simple image recognition or classification.

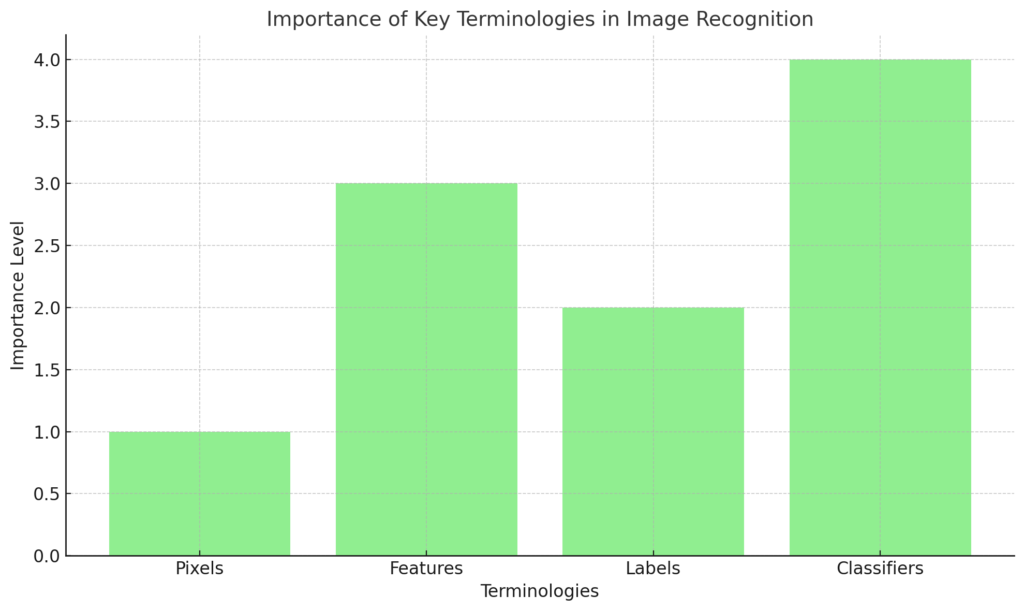

Key Terminologies in Image Recognition

- Pixels: The smallest unit of a digital image, representing a single point in a grid. Each pixel contains information about color and brightness, forming the building blocks of an image.

- Features: These are specific structures or patterns within an image, such as edges, corners, or textures, that are used to identify and classify objects. Feature extraction is a critical step in image recognition, as it involves identifying these distinguishing characteristics.

- Labels: Tags or categories assigned to images or objects within images during the training process. Labels are used to teach models what each object or scene represents.

- Classifiers: Algorithms or models that categorize images based on their features. Classifiers are trained using labeled datasets to recognize patterns and make predictions about new, unlabeled images.

Understanding these fundamental concepts is essential for grasping how image recognition systems are developed and how they function in various applications.

The Science Behind Image Recognition

How the Human Brain Recognizes Images

The human brain processes visual information through a complex system that begins with the eyes capturing light and converting it into electrical signals. These signals travel via the optic nerve to the brain’s visual cortex, where they are interpreted to form images, recognize patterns, and identify objects. From there, processing splits into two main pathways: the ventral stream, or “What Pathway,” responsible for identifying objects, and the dorsal stream, or “Where Pathway,” which handles object location and movement.

The brain’s ability to recognize objects rapidly, despite variations in appearance, is termed “core object recognition.” This capability depends primarily on the inferior temporal (IT) cortex, where neurons respond to specific visual stimuli through a largely feedforward process. Earlier in the pathway, the primary visual cortex arranges neurons into orientation maps with ‘pinwheel’ patterns, in which neighboring neurons respond to edges at gradually changing orientations, an early step in breaking complex scenes into simpler features.

Comparison of Human Vision to AI-Based Image Recognition

AI-based image recognition aims to mimic the human visual system using artificial neural networks, particularly deep learning models like convolutional neural networks (CNNs). These models are trained on large datasets to recognize patterns and classify images based on learned features. While AI can achieve high accuracy and speed in specific tasks, it lacks the universal adaptability and context-based understanding inherent in human vision.

Drawing Parallels Between Human and AI Image Recognition

Similarities in Pattern Recognition and Learning

Both human and AI systems rely on pattern recognition to identify objects. In humans, this involves the gradual transformation and re-representation of visual information along the visual pathways, leading to object recognition in the IT cortex. Similarly, AI models use layers of neural networks to extract features and patterns from images, learning from vast amounts of labeled data to improve accuracy.

Differences in Processing Speed and Accuracy

AI systems often surpass humans in processing speed and can analyze large volumes of data quickly and consistently. However, they lack the nuanced understanding and contextual interpretation that human vision provides. Humans can effortlessly recognize objects in varied contexts and under different conditions, a task that remains challenging for AI without extensive training. Moreover, human vision is influenced by past experiences and expectations, enabling a level of perception that AI systems are yet to fully replicate.

In conclusion, while AI-based image recognition has made significant strides in mimicking human vision, it still operates within the constraints of its training data and lacks the inherent adaptability and contextual awareness of the human brain. However, ongoing advancements in AI continue to bridge this gap, enhancing the capabilities of image recognition systems.

Components of Image Recognition Systems

Input Image

How Images are Captured and Input into the System

Images are captured using various devices such as cameras, smartphones, or scanners. These devices convert the visual scene into digital data, which can then be processed by image recognition systems. The captured images are input into the system in digital formats, typically as arrays of pixel values.

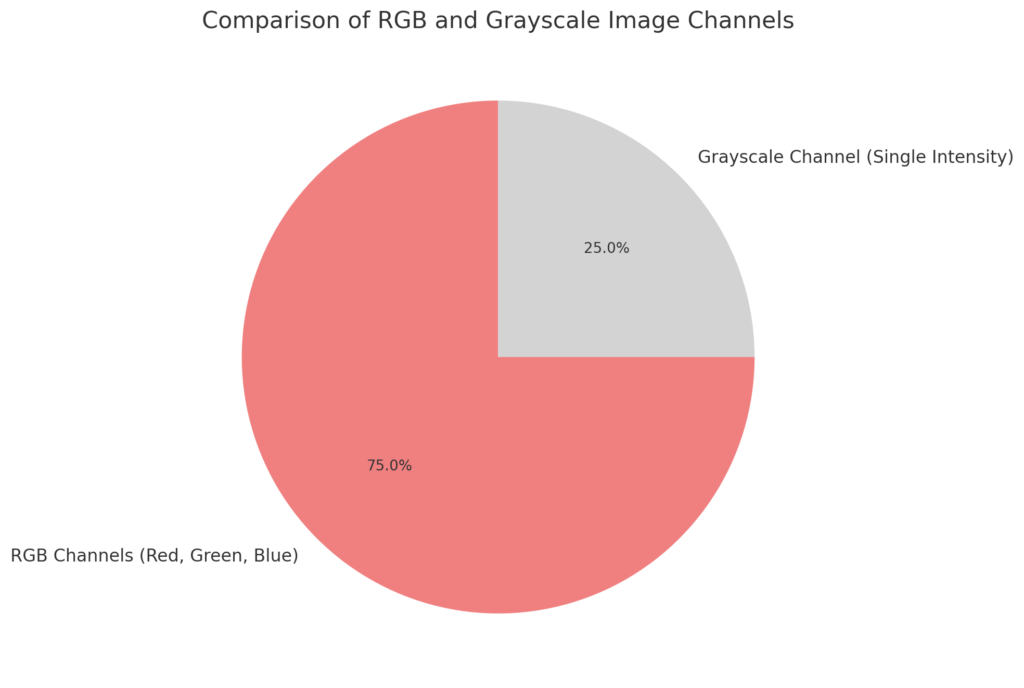

Types of Input Images: RGB, Grayscale, etc.

- RGB Images: These are the most common type of images, composed of three color channels—Red, Green, and Blue. Each pixel in an RGB image is represented by three values corresponding to the intensity of these colors.

- Grayscale Images: These images contain only shades of gray, ranging from black to white. Each pixel is represented by a single intensity value, making them simpler and less data-intensive compared to RGB images.
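To make the difference concrete, here is a minimal sketch that collapses three RGB values per pixel into one grayscale value. It assumes a tiny image stored as nested Python lists of `(R, G, B)` tuples in the 0-255 range, and it uses the ITU-R BT.601 luma weights (0.299, 0.587, 0.114), which are one common convention; other libraries may use different weights.

```python
# Convert an RGB image (nested lists of (R, G, B) tuples, values 0-255)
# to grayscale using the ITU-R BT.601 luma weights.
def rgb_to_grayscale(image):
    """Return a 2D list of grayscale intensities (0-255) for an RGB image."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in image
    ]


# A hypothetical 2x2 image: red, green, blue, and white pixels.
rgb = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]
gray = rgb_to_grayscale(rgb)
print(gray)  # [[76, 150], [29, 255]]
```

Each pixel shrinks from three numbers to one, which is why grayscale inputs need roughly a third of the storage and computation of RGB inputs.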

Feature Extraction

Explanation of Feature Extraction Process

Feature extraction involves identifying and isolating various attributes or patterns within an image that are crucial for recognizing objects. This process reduces the complexity of the image data by transforming it into a set of measurable characteristics, such as edges, textures, or shapes. Techniques like edge detection, corner detection, and texture analysis are commonly used in feature extraction.
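Edge detection, one of the techniques named above, can be sketched with the classic Sobel operator. This is an illustrative hand-written version, assuming a grayscale image stored as a list of lists and no padding (so the output loses one pixel on each border). The kernels are applied as cross-correlation, which only flips the sign of the responses, not the edge strength.

```python
import math

# Sobel kernels: respond to horizontal and vertical intensity changes.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]


def apply_kernel(image, kernel):
    """Slide a 3x3 kernel over the image (no padding) and return the responses."""
    h, w = len(image), len(image[0])
    out = []
    for i in range(1, h - 1):
        row = []
        for j in range(1, w - 1):
            total = 0
            for di in range(-1, 2):
                for dj in range(-1, 2):
                    total += kernel[di + 1][dj + 1] * image[i + di][j + dj]
            row.append(total)
        out.append(row)
    return out


def sobel_edges(image):
    """Return the gradient magnitude (edge strength) at each interior pixel."""
    gx = apply_kernel(image, SOBEL_X)
    gy = apply_kernel(image, SOBEL_Y)
    return [[math.hypot(x, y) for x, y in zip(rx, ry)] for rx, ry in zip(gx, gy)]


# A 4x5 image with a vertical edge between dark (0) and bright (10) columns.
image = [[0, 0, 10, 10, 10] for _ in range(4)]
edges = sobel_edges(image)
print(edges)  # strong responses next to the edge, 0.0 in the flat region
```

The large values mark where brightness changes sharply; the flat bright region produces zero. This turns raw pixels into a measurable characteristic that later stages can use.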

Importance of Features in Identifying Objects

Features are critical in distinguishing one object from another within an image. By focusing on specific attributes, image recognition systems can accurately identify and classify objects, even in complex scenes. Effective feature extraction enhances the system’s ability to generalize from training data to new, unseen images.

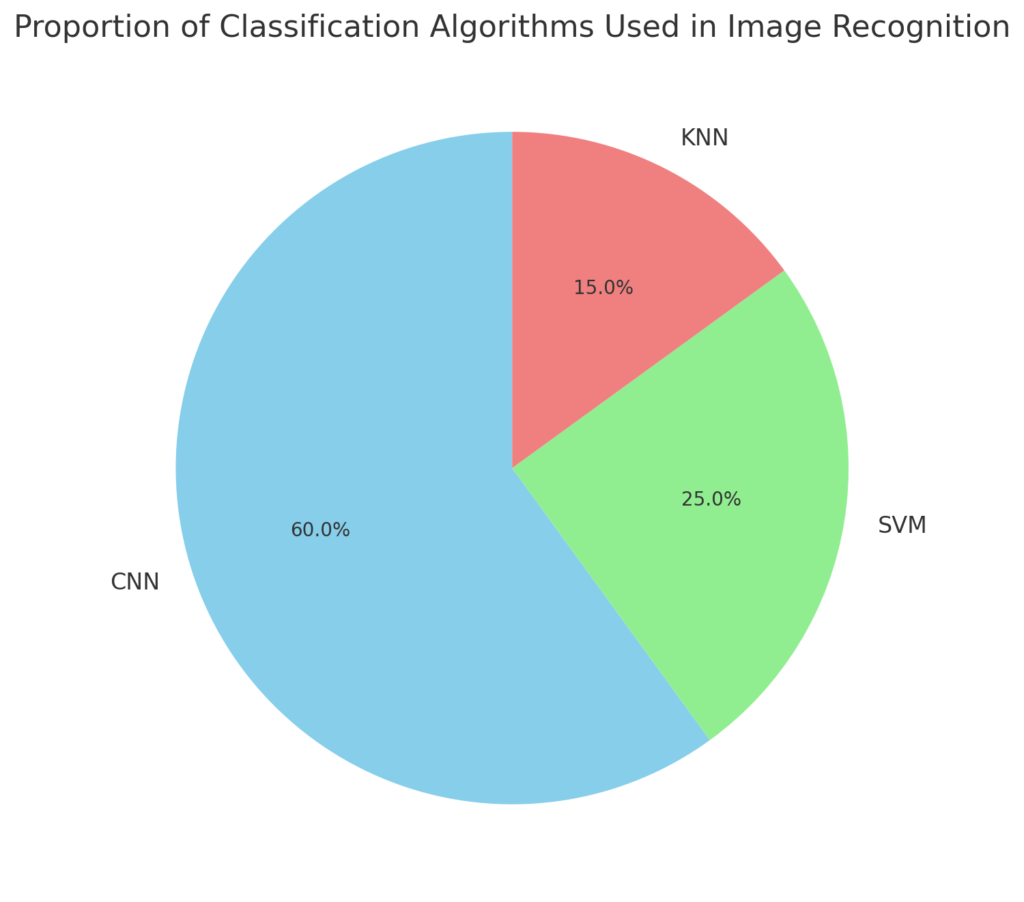

Classification Algorithms

Overview of Different Classification Algorithms Used

- Convolutional Neural Networks (CNNs): These are the most widely used algorithms for image recognition. CNNs automatically learn hierarchical features from images through multiple layers of convolutional operations.

- Support Vector Machines (SVMs): SVMs are binary classifiers at heart and can be applied to image recognition by transforming the image data into a feature space where a hyperplane separates different classes.

- K-Nearest Neighbors (KNN): This algorithm classifies images based on the majority class among the k-nearest neighbors in the feature space.
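Of the three algorithms, KNN is simple enough to show in full. The sketch below assumes each image has already been reduced to a short feature vector; the 2D vectors and the `cat`/`dog` labels are hypothetical, chosen only to make the neighborhoods obvious.

```python
import math
from collections import Counter


def knn_predict(train_features, train_labels, query, k=3):
    """Label `query` with the majority class among its k nearest training points."""
    distances = sorted(
        (math.dist(f, query), label)  # Euclidean distance in feature space
        for f, label in zip(train_features, train_labels)
    )
    nearest = [label for _, label in distances[:k]]
    return Counter(nearest).most_common(1)[0][0]


# Hypothetical 2D feature vectors (e.g., produced by a feature extractor).
features = [(1.0, 1.2), (0.8, 1.0), (1.1, 0.9), (5.0, 5.1), (5.2, 4.9), (4.8, 5.0)]
labels = ["cat", "cat", "cat", "dog", "dog", "dog"]
print(knn_predict(features, labels, (1.0, 1.0)))  # cat
print(knn_predict(features, labels, (5.0, 5.0)))  # dog
```

KNN has no training phase beyond storing the examples, so all the work happens at prediction time; that is one reason it is usually applied to compact features rather than raw pixels.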

How Algorithms Assign Labels to Images

Classification algorithms assign labels to images by analyzing the extracted features and comparing them to known patterns in the training data. For instance, CNNs use the learned weights and biases from the training process to predict the class label that best matches the input image’s features.

Output and Interpretation

How the System Generates and Interprets the Final Result

Once the classification algorithm processes the input image, the system generates an output that typically includes the predicted class label and, in many cases, a confidence score (such as a softmax probability) that indicates how strongly the model favors that label. These scores are not always well calibrated, so a high score does not guarantee a correct prediction. The system then interprets these results to provide meaningful insights or trigger actions based on the recognized objects.
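Many neural classifiers produce raw scores (logits) and convert them into a label plus confidence with the softmax function. Here is a minimal sketch; the logits and class names are hypothetical.

```python
import math


def softmax(logits):
    """Turn raw model scores (logits) into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits, class_names):
    """Return the top class label and its softmax score."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]


label, confidence = predict([2.0, 1.0, 0.1], ["cat", "dog", "car"])
print(f"{label} ({confidence:.2f})")  # cat (0.66)
```

The label is simply the class with the highest probability; the probability itself is what gets reported as the confidence score.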

Examples of Output Formats

- Class Labels: Simple text labels indicating the identified object, such as “cat,” “dog,” or “car.”

- Bounding Boxes: Rectangles drawn around detected objects in object detection tasks, often accompanied by class labels.

- Heatmaps: Visual representations showing the areas of an image that contributed most to the classification decision.

These components work together to enable image recognition systems to process and interpret visual data effectively, driving applications across various domains.

Key Technologies in Image Recognition

Convolutional Neural Networks (CNNs)

Detailed Explanation of CNN Architecture

Convolutional Neural Networks (CNNs) are a class of deep learning models specifically designed to process data with a grid-like topology, such as images. The architecture of a CNN typically consists of three main types of layers: convolutional layers, pooling layers, and fully connected layers.

- Convolutional Layers: These layers apply a set of filters or kernels across the input image to detect various features. Each filter is designed to recognize specific patterns, such as edges or textures, and the result is a feature map that highlights the presence of these patterns in the image.

- Pooling Layers: Also known as downsampling layers, pooling layers reduce the spatial dimensions of the feature maps, which helps decrease the computational load and control overfitting. Common pooling operations include max pooling and average pooling.

- Fully Connected Layers: These layers take the high-level features extracted by the convolutional and pooling layers and use them to classify the image into predefined categories. The fully connected layer connects every neuron in one layer to every neuron in the next layer, allowing for the final decision-making process.
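The three layer types can be chained in a few lines of plain Python. This is a toy forward pass, not a trainable CNN: the 2x2 kernel and the dense-layer weights are hand-set, hypothetical values, whereas a real network learns them during training. Like most deep learning libraries, `conv2d` here computes a cross-correlation, with no padding and a stride of 1.

```python
def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[a][b] * image[i + a][j + b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Element-wise ReLU activation: negative responses become 0."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; leftover rows/columns are dropped."""
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def dense(vector, weights, biases):
    """Fully connected layer: every input feeds every output neuron."""
    return [sum(w * x for w, x in zip(row, vector)) + b for row, b in zip(weights, biases)]


# Toy 5x5 grayscale image with a vertical dark-to-bright edge.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
edge_kernel = [[-1, 1], [-1, 1]]  # responds to left-to-right brightness increases

fmap = relu(conv2d(image, edge_kernel))   # 4x4 feature map
pooled = max_pool(fmap)                   # 2x2 after pooling
flat = [v for row in pooled for v in row]  # flatten to a vector of 4
scores = dense(flat, weights=[[1, 0, 1, 0], [0, 1, 0, 1]], biases=[0.0, 0.0])
print(scores)  # one raw score per hypothetical class
```

Note how each stage shrinks the data: 25 pixels become a 16-value feature map, then 4 pooled values, then 2 class scores.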

Importance of Convolution Layers, Pooling, and Fully Connected Layers

- Convolution Layers: Essential for feature extraction, allowing the model to learn spatial hierarchies and patterns in the data.

- Pooling Layers: Reduce the dimensionality of feature maps, making the model more efficient and less prone to overfitting.

- Fully Connected Layers: Integrate the extracted features to make final predictions or classifications.

Recurrent Neural Networks (RNNs)

How RNNs are Used in Image Recognition

Recurrent Neural Networks (RNNs) are primarily designed to handle sequential data, but they can be used in image recognition tasks that involve sequences, such as video frame analysis or image captioning. RNNs have a unique architecture that includes loops, allowing them to retain information about previous inputs, which is crucial for understanding sequences.
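The “loop” in an RNN is easiest to see in code. Below is a minimal sketch of a simple (Elman-style) RNN that reads one feature vector per video frame and carries a hidden state forward. The per-frame features and all weights are hypothetical; in practice the features would come from something like a CNN and the weights would be learned.

```python
import math


def rnn_step(x, h, W_x, W_h, b):
    """One simple RNN step: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    return [
        math.tanh(
            sum(wx * xi for wx, xi in zip(W_x[k], x))
            + sum(wh * hi for wh, hi in zip(W_h[k], h))
            + b[k]
        )
        for k in range(len(b))
    ]


def run_rnn(frames, W_x, W_h, b):
    """Feed a sequence of per-frame feature vectors through the RNN."""
    h = [0.0] * len(b)   # hidden state starts empty
    for x in frames:     # the loop carries h from one frame to the next
        h = rnn_step(x, h, W_x, W_h, b)
    return h             # a summary of the whole sequence


# Hypothetical 2-dimensional features for three video frames.
frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W_x = [[0.5, -0.2], [0.1, 0.4]]
W_h = [[0.3, 0.0], [0.0, 0.3]]
b = [0.0, 0.0]
print(run_rnn(frames, W_x, W_h, b))
```

Because the hidden state depends on earlier frames, feeding the same frames in a different order produces a different final state, which is exactly the sensitivity to sequence that a CNN applied to single images lacks.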

Differences Between RNNs and CNNs

- Data Type: CNNs are optimized for spatial data like images, while RNNs are designed for sequential data such as text or time series.

- Architecture: CNNs use convolutional and pooling layers to extract features, whereas RNNs have recurrent connections that allow them to maintain a memory of previous inputs.

- Use Cases: CNNs are typically used for tasks like image classification and object detection, while RNNs are used for tasks involving sequences, such as language modeling and video analysis.

Support Vector Machines (SVMs)

Basic Principles of SVMs

Support Vector Machines (SVMs) are supervised learning models used for classification and regression tasks. They work by finding the hyperplane that best separates data points of different classes in a high-dimensional space. The goal is to maximize the margin between the data points of different classes, which helps improve the model’s generalization ability.

How SVMs Classify Images

In image recognition, SVMs classify images by transforming the image data into a feature space and finding the optimal hyperplane that separates different classes. SVMs are particularly effective for binary classification tasks and can be extended to multi-class classification using techniques like one-vs-all or one-vs-one.
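The margin-maximizing idea can be sketched with a linear SVM trained by plain subgradient descent on the regularized hinge loss. This is an illustrative swap-in; production libraries typically use dedicated solvers and kernels. The feature vectors are hypothetical, labels must be +1 or -1, and the learning rate, regularization strength, and epoch count are arbitrary choices for this toy data.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Linear SVM via full-batch subgradient descent on the regularized hinge loss.

    Minimizes lam/2 * ||w||^2 + mean(max(0, 1 - y_i * (w . x_i + b))).
    Labels y_i must be +1 or -1.
    """
    w = [0.0] * len(X[0])
    b = 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]  # gradient of the regularization term
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # point is inside the margin or misclassified
                grad_w = [g - yi * xj / n for g, xj in zip(grad_w, xi)]
                grad_b -= yi / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def svm_predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x falls."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


# Hypothetical 2D feature vectors for two image classes.
X = [(2.0, 2.0), (3.0, 3.0), (2.5, 3.5), (-2.0, -1.0), (-3.0, -2.0), (-1.5, -2.5)]
y = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(X, y)
print([svm_predict(w, b, x) for x in X])
```

For more than two classes, a one-vs-rest scheme would train one such classifier per class and pick the class whose classifier returns the largest score.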

Decision Trees

How Decision Trees Work

Decision trees are a type of model that makes decisions based on a series of binary questions about the input data. Each node in the tree represents a decision point, and the branches represent the possible outcomes. The leaves of the tree represent the final classification or decision.
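The series of binary questions can be grown greedily from data. The sketch below is a small CART-style tree that chooses each “is feature f at most t?” question by Gini impurity. The two features (average brightness, edge density) and the scene labels are hypothetical, invented only to illustrate the mechanics.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0 when all labels in the group are the same."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y):
    """Find the (feature, threshold) question that most reduces impurity."""
    best, best_score = None, gini(y)
    for f in range(len(X[0])):
        for t in sorted(set(x[f] for x in X)):
            left = [lbl for x, lbl in zip(X, y) if x[f] <= t]
            right = [lbl for x, lbl in zip(X, y) if x[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build_tree(X, y, depth=0, max_depth=3):
    """Recursively grow a tree; leaves hold the majority label."""
    split = best_split(X, y) if depth < max_depth else None
    if split is None:
        return Counter(y).most_common(1)[0][0]
    f, t = split
    left = [(x, lbl) for x, lbl in zip(X, y) if x[f] <= t]
    right = [(x, lbl) for x, lbl in zip(X, y) if x[f] > t]
    return (f, t,
            build_tree([x for x, _ in left], [lbl for _, lbl in left], depth + 1, max_depth),
            build_tree([x for x, _ in right], [lbl for _, lbl in right], depth + 1, max_depth))


def tree_predict(tree, x):
    """Answer the binary question at each node until a leaf is reached."""
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if x[f] <= t else right
    return tree


# Hypothetical features: (average brightness, edge density).
X = [(0.9, 0.1), (0.8, 0.2), (0.2, 0.7), (0.3, 0.9), (0.85, 0.8)]
y = ["sky", "sky", "forest", "forest", "building"]
tree = build_tree(X, y)
print([tree_predict(tree, x) for x in X])
```

Each internal node is a `(feature, threshold, left, right)` tuple and each leaf is a label, mirroring the node/branch/leaf structure described above.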

Use Cases of Decision Trees in Image Recognition

Decision trees are rarely used as standalone models for image recognition, because a single tree struggles to capture complex patterns in raw pixel data and tends to overfit. They can, however, be part of ensemble methods like Random Forests or Gradient Boosting Machines, which handle complex image data more effectively. These ensemble methods combine multiple decision trees to improve accuracy and robustness in tasks like image classification and object detection.

These key technologies form the backbone of modern image recognition systems, each contributing unique strengths to the field of computer vision.

How AI Enhances Image Recognition

Machine Learning Basics

Overview of Machine Learning and Its Role in Image Recognition

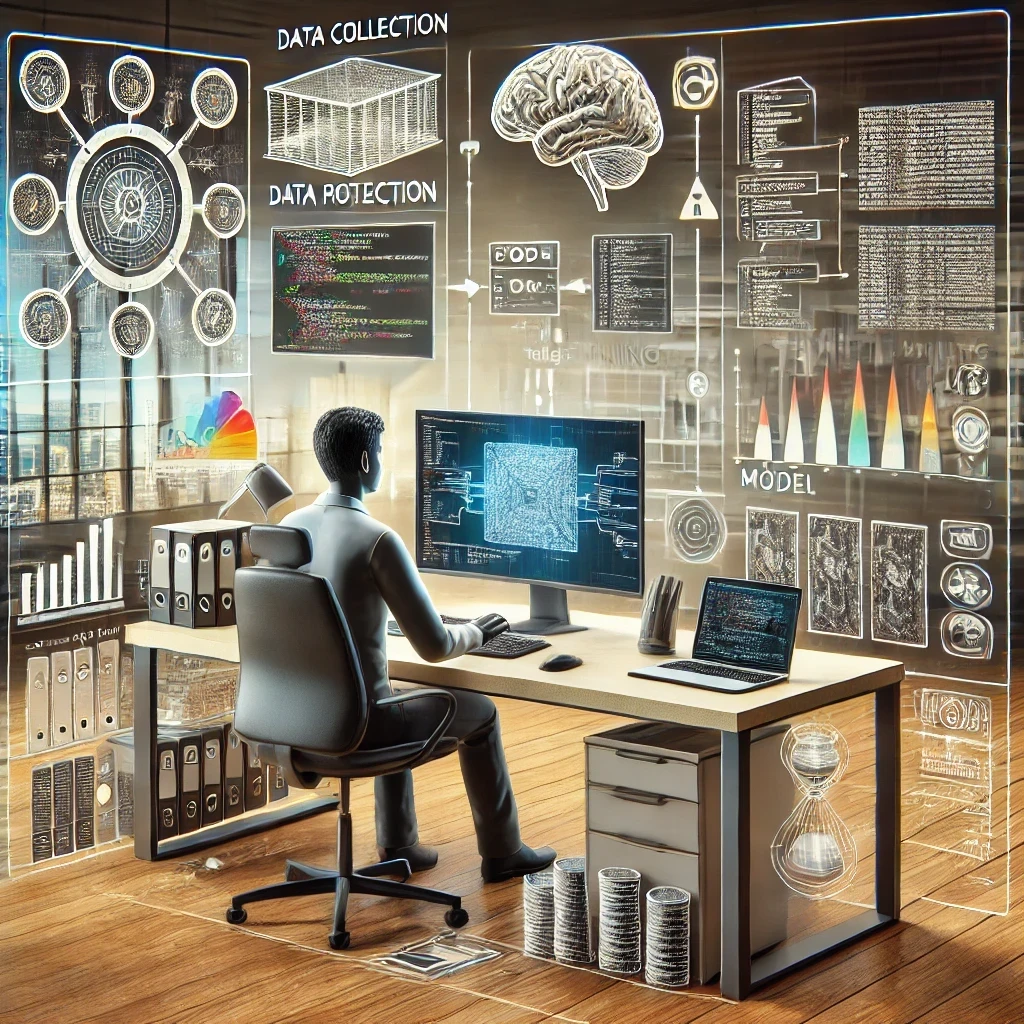

Machine learning (ML) is a subset of artificial intelligence that focuses on building systems that can learn from data and improve their performance over time without being explicitly programmed. In image recognition, ML algorithms are trained on large datasets of labeled images to identify patterns and features, enabling the system to recognize and classify objects within new images. This process involves several steps, including data collection, preparation, model selection, training, testing, and deployment.

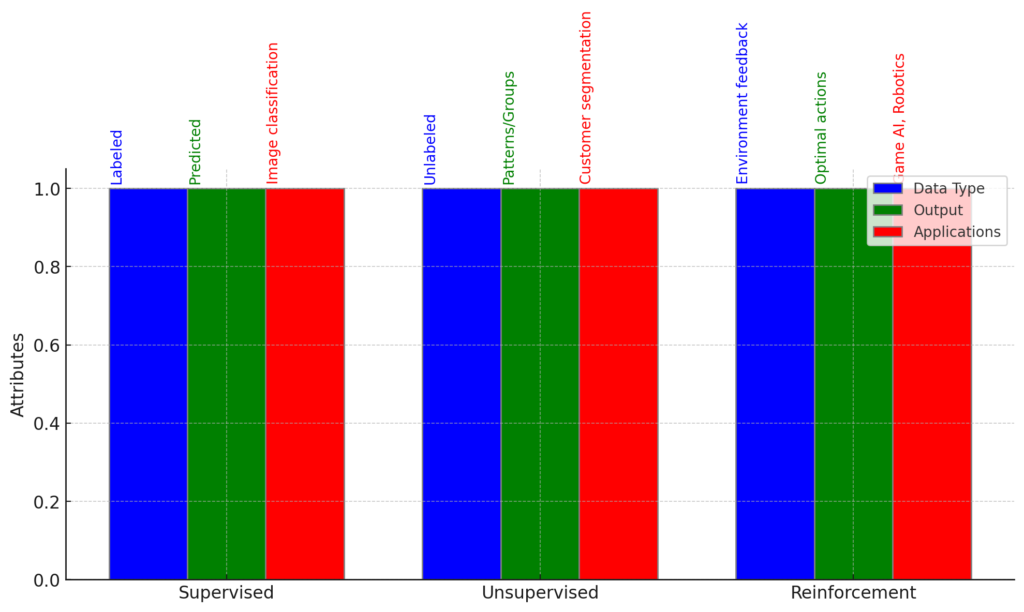

Types of Machine Learning: Supervised, Unsupervised, and Reinforcement Learning

- Supervised Learning: Involves training models on labeled data, where the correct output is known. This is the most common approach in image recognition, used for tasks like classification and regression.

- Unsupervised Learning: Deals with unlabeled data, focusing on discovering hidden patterns or groupings in the data. Techniques like clustering and dimensionality reduction are common in unsupervised learning.

- Reinforcement Learning: Involves training models to make sequences of decisions by rewarding desired behaviors. It is less common in image recognition but can be used in scenarios requiring interaction with an environment, such as robotics.

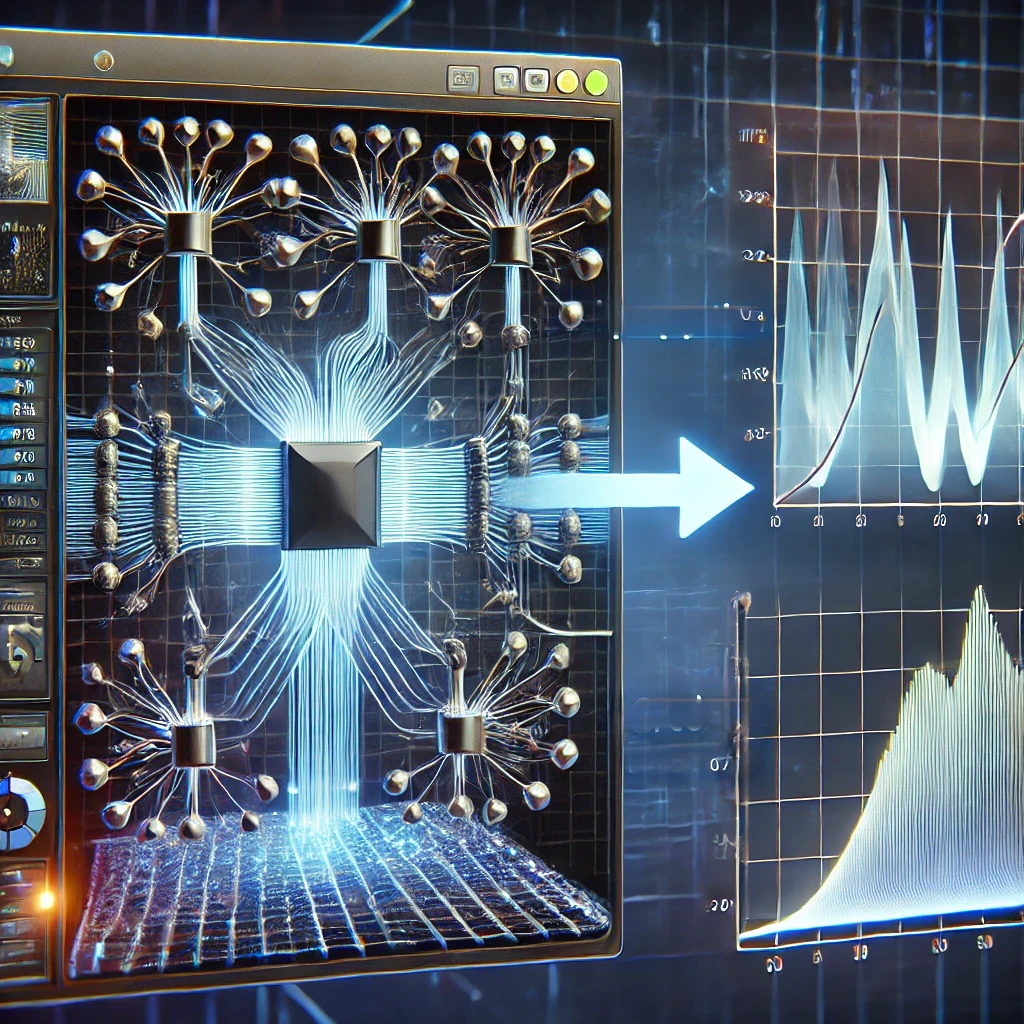

Deep Learning and Neural Networks

How Deep Learning Improves Image Recognition

Deep learning, a subset of machine learning, uses neural networks with multiple layers (deep neural networks) to model complex patterns in data. It excels in image recognition by automatically learning and extracting intricate features from images, improving accuracy and efficiency compared to traditional methods. Deep learning models, particularly convolutional neural networks (CNNs), have revolutionized image recognition by enabling the processing of large-scale and complex image datasets.

Role of Neural Networks in Processing and Recognizing Images

Neural networks, inspired by the human brain, consist of interconnected layers of nodes (neurons) that process input data. In image recognition, CNNs are particularly effective, as they use convolutional layers to detect spatial hierarchies of features, pooling layers to reduce dimensionality, and fully connected layers for classification. This architecture allows CNNs to learn and recognize patterns in images, making them highly effective for tasks such as object detection and image classification.

Backpropagation and Gradient Descent

Explanation of Backpropagation in Training Neural Networks

Backpropagation is a key algorithm used to train neural networks. It applies the chain rule of calculus backward through the network, layer by layer, to calculate the gradient of the loss function with respect to each weight. These gradients indicate how each weight should change to reduce the error, which is why backpropagation is typically used in conjunction with gradient descent.
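The chain rule at the heart of backpropagation can be seen in a network of just one sigmoid neuron with a squared-error loss. The sketch below computes the gradient analytically, backward from the loss, and checks it against a finite-difference estimate. The weight, bias, input, and target values are arbitrary illustrative numbers.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w, b, x, y):
    """Single sigmoid neuron with squared-error loss."""
    z = w * x + b
    a = sigmoid(z)
    loss = 0.5 * (a - y) ** 2
    return z, a, loss


def backward(w, b, x, y):
    """Backpropagation: apply the chain rule from the loss back to w and b."""
    _, a, _ = forward(w, b, x, y)
    dloss_da = a - y              # derivative of 0.5 * (a - y)^2 w.r.t. a
    da_dz = a * (1.0 - a)         # derivative of the sigmoid
    dloss_dz = dloss_da * da_dz
    dloss_dw = dloss_dz * x       # dz/dw = x
    dloss_db = dloss_dz * 1.0     # dz/db = 1
    return dloss_dw, dloss_db


def numerical_grad_w(w, b, x, y, eps=1e-6):
    """Central finite difference, used only to check the analytic gradient."""
    return (forward(w + eps, b, x, y)[2] - forward(w - eps, b, x, y)[2]) / (2 * eps)


w, b, x, y = 0.5, -0.1, 2.0, 1.0
gw, gb = backward(w, b, x, y)
print(gw, numerical_grad_w(w, b, x, y))  # the two values should agree closely
```

In a deep network the same pattern repeats: each layer multiplies the incoming gradient by its own local derivative and passes the result to the layer before it.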

How Gradient Descent Optimizes Model Performance

Gradient descent is an optimization algorithm used to minimize the loss function by iteratively adjusting the model’s parameters. At each step, it moves the parameters in the direction of steepest descent (the negative gradient) toward a minimum of the loss; for deep networks this is usually a local minimum or another good-enough point rather than a guaranteed global one. The learning rate controls the size of these steps: too small and training is slow, too large and the updates can oscillate or diverge. By repeatedly updating the model’s weights, gradient descent reduces the training error, which, together with good data and regularization, helps neural networks perform accurately on image recognition tasks.
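The effect of the learning rate shows up even on a one-parameter problem. The sketch below minimizes the toy loss L(θ) = (θ − 3)², whose gradient is 2(θ − 3), using two different learning rates. The loss function is purely illustrative.

```python
def gradient_descent(grad, start, learning_rate, steps):
    """Repeatedly step against the gradient: theta <- theta - lr * grad(theta)."""
    theta = start
    for _ in range(steps):
        theta -= learning_rate * grad(theta)
    return theta


def quadratic_grad(theta):
    """Gradient of the toy loss L(theta) = (theta - 3)^2, minimized at theta = 3."""
    return 2 * (theta - 3)


print(gradient_descent(quadratic_grad, start=0.0, learning_rate=0.1, steps=100))  # close to 3
print(gradient_descent(quadratic_grad, start=0.0, learning_rate=1.1, steps=100))  # diverges
```

With a learning rate of 0.1, each step shrinks the distance to the minimum by a factor of 0.8. With 1.1, each step overshoots and multiplies the distance by -1.2, so the parameter moves further away every iteration.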

Overall, AI enhances image recognition by leveraging advanced machine learning techniques, particularly deep learning, to process and interpret visual data with high accuracy and efficiency.

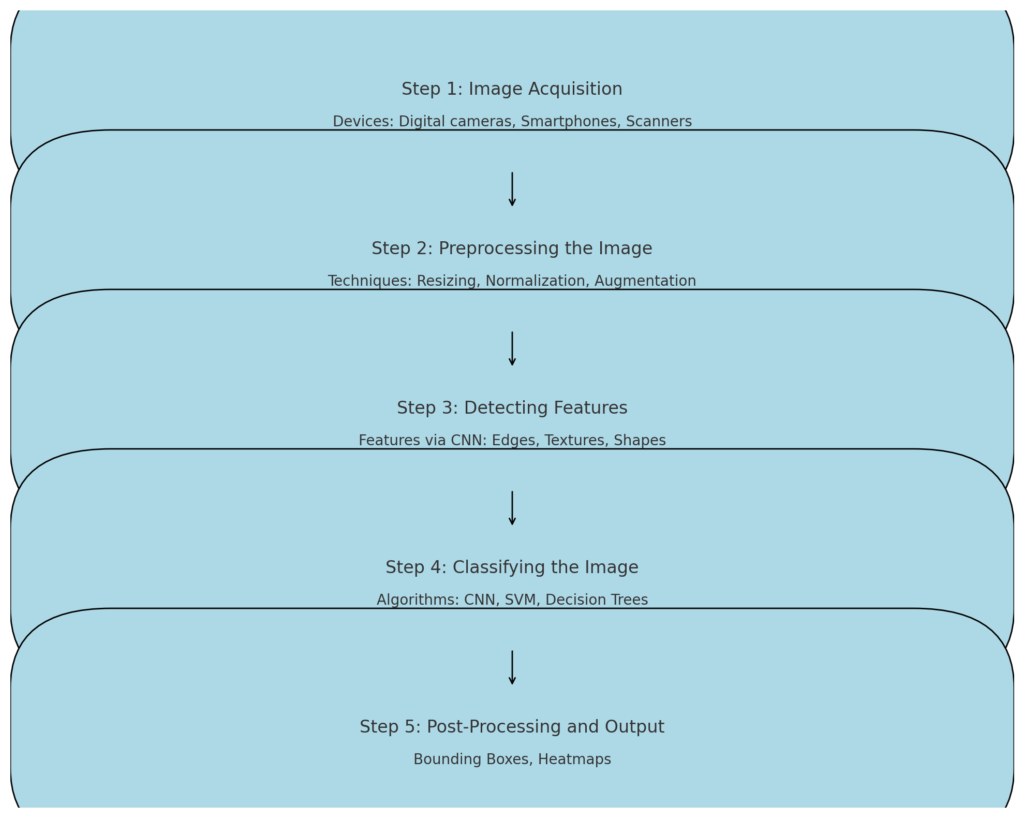

The Process of Image Recognition in AI

Step 1: Image Acquisition

Methods of Capturing Images

Image acquisition involves capturing visual information from the real world and converting it into digital data. This can be done using various devices such as digital cameras, smartphones, scanners, X-ray machines, and LiDAR sensors. Each device detects light or other signals in its own way (X-ray machines measure X-rays passing through the body, and LiDAR measures reflected laser pulses) and converts them into digital data, usually a grid of pixels, that forms the basis for further processing.

Importance of Image Quality and Resolution

The quality and resolution of the acquired image significantly impact the effectiveness of subsequent image processing and recognition tasks. High-resolution images provide more detail, which is crucial for accurately distinguishing between different structures and features. Poor image quality can lead to inaccuracies in recognition tasks, as it may obscure important details necessary for correct classification.

Step 2: Preprocessing the Image

Techniques for Image Preprocessing

Preprocessing involves preparing the captured images for analysis by enhancing quality and reducing variability. Common techniques include:

- Resizing: Ensures uniformity in image dimensions, which is essential for models that require consistent input sizes.

- Normalization: Scales pixel values to a standard range, typically between 0 and 1, to improve model convergence and performance during training.

- Augmentation: Involves applying transformations such as rotation, flipping, or cropping to increase the diversity of the training dataset and improve model robustness.
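The three techniques can be sketched in plain Python. This version assumes 8-bit grayscale images stored as lists of lists. It uses nearest-neighbor resizing, the simplest interpolation method (libraries usually default to smoother methods such as bilinear), and a horizontal flip as the example augmentation.

```python
import random


def resize_nearest(image, new_h, new_w):
    """Nearest-neighbor resize so every image has the same input shape."""
    h, w = len(image), len(image[0])
    return [[image[i * h // new_h][j * w // new_w] for j in range(new_w)]
            for i in range(new_h)]


def normalize(image, max_value=255):
    """Scale 8-bit pixel values into the range [0, 1]."""
    return [[p / max_value for p in row] for row in image]


def random_hflip(image, p=0.5, rng=random):
    """Augmentation: mirror the image left-to-right with probability p."""
    return [row[::-1] for row in image] if rng.random() < p else image


img = [[0, 255],
       [128, 64]]
resized = resize_nearest(img, 4, 4)      # 2x2 -> 4x4
scaled = normalize(resized)              # values now in [0, 1]
augmented = random_hflip(scaled, p=1.0)  # p=1.0 forces the flip for the demo
print(resized[0], scaled[0][2], augmented[0])
```

In a typical pipeline, resizing and normalization are applied to every image, while random augmentations like the flip are applied only to training images, and differently each epoch.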

Impact of Preprocessing on Model Accuracy

Effective preprocessing can significantly enhance model accuracy by ensuring that the input data is clean, consistent, and in a suitable format for training. It can reduce noise and, when combined with augmentation or resampling, help balance the dataset, so the model generalizes better to new data.

Step 3: Detecting Features

How Features are Detected and Extracted

Feature detection involves identifying significant patterns or attributes within an image, such as edges, textures, or shapes. Convolutional Neural Networks (CNNs) are commonly used for this task, applying filters to the input image to create feature maps that highlight the presence of detected features.

Role of Filters and Convolution Operations

Filters in CNNs are learned during the training process and are crucial for extracting relevant features from the image. Convolution operations apply these filters across the image to generate feature maps, which summarize the presence and strength of features throughout the image.

Step 4: Classifying the Image

Classification Techniques and Algorithms

Once features are extracted, classification algorithms assign labels to the images based on the detected features. Common techniques include:

- Convolutional Neural Networks (CNNs): Use layers of neurons to classify images based on learned features.

- Support Vector Machines (SVMs): Classify images by finding the optimal hyperplane that separates different classes in the feature space.

- Decision Trees: Use a series of binary decisions to classify images, often as part of ensemble methods like Random Forests.

How Models Assign Labels to Images

Models assign labels by comparing the extracted features to known patterns in the training data. The model outputs the class label with the highest probability based on the learned features and classification rules.

Step 5: Post-Processing and Output

Methods for Refining and Interpreting the Output

Post-processing involves refining the model’s output to improve accuracy and interpretability. Techniques include:

- Smoothing: Reduces noise in the output by averaging predictions over multiple runs or using ensemble methods.

- Thresholding: Applies a cutoff to predicted probabilities to decide class membership; raising the cutoff reduces false positives at the cost of more missed detections.
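Both post-processing steps fit in a few lines. The sketch assumes a multi-label setting, where an image can contain several classes at once. The per-class probabilities from three models (or three runs) are hypothetical numbers.

```python
def average_predictions(runs):
    """Smoothing: average per-class probabilities from several runs or models."""
    n = len(runs)
    return [sum(col) / n for col in zip(*runs)]


def apply_threshold(probs, class_names, threshold=0.5):
    """Keep only the classes whose probability clears the cutoff."""
    return [name for name, p in zip(class_names, probs) if p >= threshold]


# Hypothetical outputs of three models for one image (multi-label setting).
runs = [[0.92, 0.40, 0.05],
        [0.88, 0.60, 0.10],
        [0.95, 0.35, 0.02]]
classes = ["person", "bicycle", "dog"]
avg = average_predictions(runs)
print(apply_threshold(avg, classes, threshold=0.5))  # ['person']
print(apply_threshold(avg, classes, threshold=0.4))  # ['person', 'bicycle']
```

Averaging removes the single-run spike for “bicycle” (0.60), and the choice of cutoff then decides whether its averaged score of 0.45 counts as a detection.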

Examples of Post-Processing in Real-World Applications

In real-world applications, post-processing can involve adjusting the output to fit specific requirements, such as:

- Bounding Boxes: In object detection, drawing boxes around detected objects to indicate their location.

- Heatmaps: Visualizing areas of an image that contributed most to the classification decision, useful in medical imaging for highlighting areas of interest.

These steps collectively form the image recognition process in AI, transforming raw visual data into meaningful insights and actions through advanced computational techniques.

Training Image Recognition Models

Collecting and Labeling Data

Importance of a Large and Diverse Dataset

A large and diverse dataset is crucial for training robust image recognition models. A diverse dataset ensures that the model can generalize well to new, unseen data by exposing it to a wide variety of scenarios, objects, and conditions. This diversity helps prevent overfitting, where the model performs well on training data but poorly on new data. A large dataset provides the volume needed for the model to learn complex patterns and features, improving its accuracy and reliability.

Techniques for Data Labeling and Annotation

Data labeling and annotation involve assigning meaningful labels to images, which are used to train the model. Techniques for labeling include:

- Manual Annotation: Human annotators label images by hand, which typically yields high-quality labels but costs more money and time.

- Crowdsourcing: Platforms like Amazon Mechanical Turk allow for distributed human labeling, which can speed up the process.

- Automated Annotation: Using pre-trained models or algorithms to label data automatically, which can be efficient but may require manual verification for accuracy.

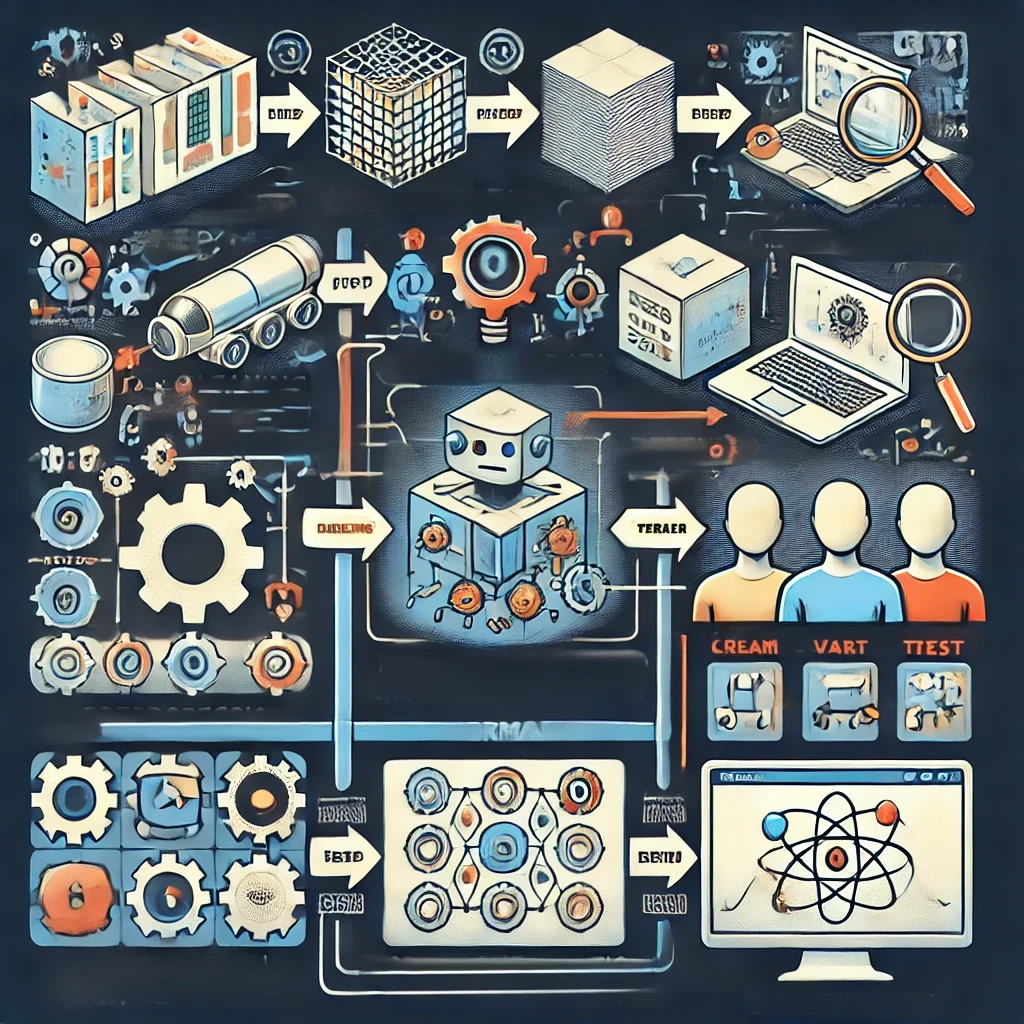

Creating Training and Test Sets

How to Split Data into Training, Validation, and Test Sets

Splitting the dataset into training, validation, and test sets is essential for evaluating model performance:

- Training Set: Used to train the model, typically comprising 60-80% of the total dataset.

- Validation Set: Used to tune hyperparameters and prevent overfitting, usually 10-20% of the dataset.

- Test Set: Used to evaluate the final model’s performance, typically 10-20% of the dataset.
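A 70/15/15 split, which falls within the ranges above, can be sketched with Python’s standard `random` module. The file names are hypothetical placeholders for labeled images. The fixed seed makes the split reproducible across runs.

```python
import random


def split_dataset(items, train=0.7, val=0.15, seed=42):
    """Shuffle, then cut into train/validation/test; the remainder goes to test."""
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed -> reproducible split
    n_train = int(len(items) * train)
    n_val = int(len(items) * val)
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])


# Hypothetical file names standing in for labeled images.
images = [f"img_{i:03d}.png" for i in range(100)]
train_set, val_set, test_set = split_dataset(images)
print(len(train_set), len(val_set), len(test_set))  # 70 15 15
```

Shuffling before splitting matters: if the images were stored in class order, an unshuffled cut could leave some classes entirely out of the test set.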

Importance of Proper Data Division

Proper data division ensures that the model is evaluated on unseen data, providing an unbiased assessment of its performance. It helps in detecting overfitting and underfitting, allowing for adjustments to improve the model’s generalization capabilities.

Training Algorithms and Iteration

Overview of the Training Process

The training process involves feeding the training data into the model, allowing it to learn by adjusting its weights and biases through optimization algorithms. This process is iterative, with each iteration refining the model’s predictions.

Importance of Iterations and Epochs in Model Training

- Iterations: Each iteration involves processing a batch of data through the model, updating weights based on the error calculated.

- Epochs: An epoch is one complete pass through the entire training dataset. Multiple epochs allow the model to learn and refine its predictions over time.

The number of iterations and epochs is crucial for finding the right balance between underfitting and overfitting. Too few epochs may result in underfitting, while too many can lead to overfitting. Proper tuning of these parameters is essential for achieving optimal model performance.
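The relationship between iterations and epochs comes down to simple arithmetic: iterations per epoch = ceil(dataset size / batch size). The skeleton below counts weight updates instead of training a real model. The 1,000-item dataset and batch size of 32 are illustrative values.

```python
import math
import random


def iterate_minibatches(data, batch_size, rng):
    """Yield shuffled mini-batches; one full pass over `data` is one epoch."""
    indices = list(range(len(data)))
    rng.shuffle(indices)
    for start in range(0, len(indices), batch_size):
        yield [data[i] for i in indices[start:start + batch_size]]


def train(data, epochs, batch_size, seed=0):
    """Skeleton training loop that only counts iterations (weight updates)."""
    rng = random.Random(seed)
    iterations = 0
    for _ in range(epochs):
        for _batch in iterate_minibatches(data, batch_size, rng):
            # A real loop would run the forward pass, loss, backprop, and update here.
            iterations += 1
    return iterations


dataset = list(range(1000))                      # stand-in for 1,000 training images
print(math.ceil(len(dataset) / 32))              # 32 iterations per epoch (last batch has 8)
print(train(dataset, epochs=5, batch_size=32))   # 160 iterations in total
```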

Overall, the process of training image recognition models involves careful data preparation, model training, and evaluation to ensure accuracy and generalization across diverse scenarios.

Challenges in Image Recognition

Variability in Image Quality

How Different Quality Levels Affect Recognition Accuracy

The quality of images significantly influences the accuracy of image recognition systems. High-quality images enable models to extract clear and detailed features, leading to better recognition accuracy. Conversely, low-quality images, characterized by noise, low resolution, or poor lighting, can obscure important details, making it difficult for models to accurately identify and classify objects. This can result in misclassifications and decreased model performance.

Techniques to Handle Variability

To manage variability in image quality, several techniques are employed:

- Image Preprocessing: Techniques such as noise reduction, contrast enhancement, and resolution adjustments can improve image quality before feeding it into recognition models.

- Image Quality Recognition (IQR): Automatically assessing image quality, often with AI models, so that poor images can be enhanced or filtered out before training and inference.

- Data Augmentation: By artificially increasing the diversity of the training dataset through transformations like rotation, scaling, and flipping, models can become more robust to variations in image quality.

Handling Occlusions and Distortions

Challenges Posed by Occluded and Distorted Images

Occlusions and distortions present significant challenges in image recognition. Occlusions occur when parts of an object are hidden, while distortions can arise from various factors such as motion blur or noise. These issues can prevent models from correctly identifying objects, as they may lack sufficient visible features for accurate recognition.

Solutions to Improve Model Robustness

- Feature Quantization: This approach enhances the robustness of convolutional neural networks (CNNs) by adjusting feature distributions to mitigate the impact of distortions[4].

- Amodal Perception and Contextual Information: Techniques that infer the presence of occluded parts using visible context can improve detection accuracy under occlusion[5].

- Ensemble Methods: Combining multiple models can help improve robustness against occlusions and distortions by leveraging different model strengths.

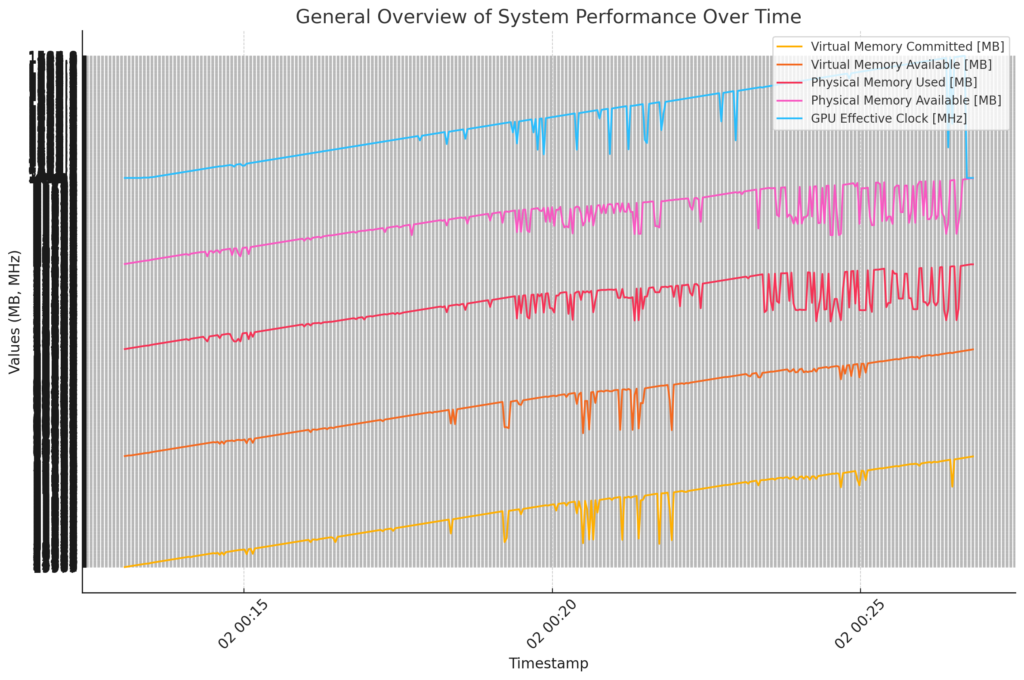

Dealing with Large Datasets

Managing and Processing Large Volumes of Image Data

Handling large datasets is crucial for training effective image recognition models. Large datasets provide diverse examples that help models generalize better, but they also require significant computational resources for storage and processing.

Importance of Computational Resources and Efficient Algorithms

- High-Performance Computing: Utilizing powerful GPUs and distributed computing environments can significantly speed up the training process for large datasets.

- Efficient Algorithms: Algorithms that optimize memory usage and processing speed, such as those using mini-batch gradient descent, are essential for managing large-scale data efficiently.

- Data Management Techniques: Employing strategies like data compression and efficient storage solutions can help manage large datasets without compromising accessibility or quality.

Overall, addressing these challenges involves a combination of advanced preprocessing techniques, robust model architectures, and efficient computational strategies to ensure accurate and reliable image recognition.

Improving Accuracy in Image Recognition

Data Augmentation Techniques

Methods to Increase Dataset Diversity

Data augmentation involves applying various transformations to the existing dataset to artificially increase its size and diversity. Common techniques include:

- Geometric Transformations: Flipping, rotating, cropping, and scaling images to introduce variations in perspective and orientation.

- Color Space Augmentation: Adjusting brightness, contrast, and color channels to simulate different lighting conditions.

- Kernel Filters: Applying blurring or sharpening to simulate variations in focus and image sharpness.

- Random Erasing: Removing parts of an image to make models more robust to occlusions.

These techniques help models learn invariances and improve their ability to generalize to new data.
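Two of the listed techniques, brightness adjustment (color space augmentation) and random erasing, can be sketched as follows. The sketch assumes 8-bit grayscale images stored as lists of lists. The brightness factor, patch size, and seed are arbitrary illustrative choices.

```python
import random


def adjust_brightness(image, factor):
    """Color-space augmentation: scale intensities, clipped to the 0-255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def random_erase(image, erase_h, erase_w, rng, fill=0):
    """Random erasing: blank out one randomly placed erase_h x erase_w rectangle."""
    h, w = len(image), len(image[0])
    top = rng.randrange(h - erase_h + 1)
    left = rng.randrange(w - erase_w + 1)
    out = [row[:] for row in image]  # copy so the original image stays untouched
    for i in range(top, top + erase_h):
        for j in range(left, left + erase_w):
            out[i][j] = fill
    return out


rng = random.Random(7)
image = [[100] * 6 for _ in range(6)]
brighter = adjust_brightness(image, 1.5)  # every pixel becomes 150
erased = random_erase(image, 2, 3, rng)   # a 2x3 patch is set to 0
print(sum(p == 0 for row in erased for p in row))  # 6
```

The erased patch imitates an occluding object, which encourages the model to rely on more than one region of the image when making a prediction.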

Impact of Data Augmentation on Model Performance

Data augmentation can significantly enhance model performance by reducing overfitting and improving generalization. By exposing the model to a broader range of scenarios, it becomes more robust to variations in real-world data, leading to improved accuracy and reliability in image recognition tasks.

Transfer Learning

How Transfer Learning Can Enhance Model Accuracy

Transfer learning takes a model pre-trained on a large dataset and fine-tunes it on a smaller, task-specific dataset. Because the pre-trained model has already learned general visual features such as edges, textures, and shapes, this approach can significantly boost accuracy, especially when labeled data is limited, while requiring less training time and fewer computational resources than training from scratch.
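The toy Python sketch below illustrates the simplest form of this idea: the "pre-trained" part of the model stays frozen and only a new classification head is trained on the small task-specific dataset (an approach often called feature extraction). The `frozen_features` function is a hypothetical stand-in for a real pre-trained backbone such as a CNN with frozen weights; full fine-tuning would additionally update some of the backbone's layers, which this sketch does not do.

```python
import math
from typing import List, Sequence, Tuple

def frozen_features(x: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for a pre-trained backbone (e.g., a CNN with frozen weights).
    It is a fixed function: nothing in this sketch ever updates it."""
    return [x[0] + x[1], x[0] - x[1]]

def train_head(data, labels, lr: float = 0.5, epochs: int = 200) -> Tuple[List[float], float]:
    """Train only a new logistic-regression head on top of the frozen features."""
    feats = [frozen_features(x) for x in data]  # computed once, since the backbone is frozen
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of class 1
            grad = p - y  # gradient of binary cross-entropy with respect to z
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
            b -= lr * grad
    return w, b

def predict(w: List[float], b: float, x: Sequence[float]) -> int:
    z = sum(wi * fi for wi, fi in zip(w, frozen_features(x))) + b
    return 1 if z > 0 else 0

# Tiny task-specific dataset: label 1 when the first value exceeds the second.
data = [(2.0, 1.0), (3.0, 0.5), (1.0, 2.0), (0.5, 3.0)]
labels = [1, 1, 0, 0]
w, b = train_head(data, labels)
print([predict(w, b, x) for x in data])  # [1, 1, 0, 0]
```

Freezing the backbone means its features are computed once and only a handful of parameters are learned, which is why this approach works with very little labeled data.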

Examples of Pre-Trained Models Used in Transfer Learning

Common pre-trained models used in transfer learning include:

- VGG16 and VGG19: Known for their deep architecture and effectiveness in image classification tasks.

- ResNet: Uses residual (skip) connections that make very deep networks, with more than 100 layers, practical to train.

- Inception (GoogLeNet): Uses Inception modules that apply convolutional filters of several sizes (such as 1×1, 3×3, and 5×5) in parallel to capture features at different scales.

These models are typically trained on large datasets like ImageNet and can be adapted to various image recognition tasks.

Hyperparameter Tuning

Importance of Tuning Hyperparameters

Hyperparameter tuning is crucial for optimizing model performance. Hyperparameters, such as learning rate, batch size, and network architecture, significantly influence how a model learns from data. Proper tuning can enhance model accuracy, prevent overfitting, and improve generalization to unseen data.

Techniques for Optimizing Model Performance

Several techniques are used for hyperparameter tuning:

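- Grid Search: Exhaustively evaluates every combination in a specified hyperparameter grid. It is thorough but computationally expensive, because the number of combinations multiplies with each hyperparameter added.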

- Random Search: Samples random combinations of hyperparameters, often more efficient than grid search.

- Bayesian Optimization: Uses a probabilistic model to guide the search for optimal hyperparameters, balancing exploration and exploitation.

- Genetic Algorithms: Inspired by natural selection, these algorithms evolve hyperparameter sets over generations to find well-performing configurations.

These techniques help identify the best hyperparameter configurations, leading to improved model performance and efficiency.
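A minimal Python sketch contrasting grid search and random search follows. The `validation_score` function is a hypothetical stand-in for "train a model with these settings and return its validation accuracy"; in practice each call would be a full training run, which is exactly why the number of evaluations matters. Bayesian optimization and genetic algorithms are not shown.

```python
import itertools
import random

def validation_score(lr: float, batch_size: int) -> float:
    """Hypothetical stand-in for a real training run's validation accuracy.
    This toy function simply peaks at lr=0.01 and batch_size=64."""
    return 1.0 - abs(lr - 0.01) * 10 - abs(batch_size - 64) / 256

def grid_search(lrs, batch_sizes):
    # Evaluate every combination: 3 learning rates x 3 batch sizes = 9 runs here.
    return max(itertools.product(lrs, batch_sizes), key=lambda cfg: validation_score(*cfg))

def random_search(n_trials: int, seed: int = 0):
    rng = random.Random(seed)
    best_cfg, best_score = None, float("-inf")
    for _ in range(n_trials):
        # Sample the learning rate log-uniformly between 1e-4 and 1e-1.
        cfg = (10 ** rng.uniform(-4, -1), rng.choice([16, 32, 64, 128, 256]))
        score = validation_score(*cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score

print(grid_search([0.001, 0.01, 0.1], [32, 64, 128]))  # (0.01, 64)
print(random_search(n_trials=9))
```

With the same budget of nine runs, random search tries nine distinct learning rates instead of three, which is one reason it is often more efficient when only a few hyperparameters really matter.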

In summary, improving accuracy in image recognition involves employing data augmentation to enhance dataset diversity, using transfer learning to leverage pre-trained models, and conducting hyperparameter tuning to optimize model performance. These strategies collectively contribute to building more robust and accurate image recognition systems.

Advanced Concepts in Image Recognition

Attention Mechanisms

Explanation of Attention Mechanisms

Attention mechanisms in neural networks are inspired by the human cognitive process of focusing on specific parts of information while ignoring others. In the context of image recognition, attention mechanisms dynamically adjust the focus on different regions of an image, assigning higher importance to areas that are more relevant to the task at hand. This is achieved by computing a weighted sum of input features, where the weights indicate the significance of each feature.
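One common form of this weighted sum is scaled dot-product attention, the variant used in Transformers. The minimal pure-Python sketch below computes it for a single query over three toy "image region" feature vectors; the vectors and function names are illustrative assumptions, and real models learn separate projections for queries, keys, and values and operate on batched tensors.

```python
import math
from typing import List, Sequence, Tuple

def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query: Sequence[float], keys: Sequence[Sequence[float]],
           values: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Scaled dot-product attention for a single query.
    Each score is the query-key dot product divided by sqrt(d); softmax turns the scores
    into weights that sum to 1; the output is the weighted sum of the value vectors."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    out = [sum(wt * v[i] for wt, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, out

# Three image regions, each summarized by a toy 2-D feature vector.
regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, out = attend([2.0, 0.0], keys=regions, values=regions)
print([round(wt, 3) for wt in weights])  # [0.446, 0.108, 0.446]
```

The second region, whose features do not match the query, receives the smallest weight and so contributes least to the output.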

How Attention Improves Image Recognition

Attention mechanisms enhance image recognition by allowing models to concentrate on important features within an image, thereby improving the accuracy and efficiency of processing. This selective focus helps in tasks where certain parts of an image carry more significance, such as object detection and image segmentation. By emphasizing relevant features and downplaying less important ones, attention mechanisms improve the model’s ability to interpret complex visual data.

Transformers in Image Recognition

Role of Transformers in Modern Image Recognition

Transformers, originally developed for natural language processing, have been adapted for image recognition tasks through architectures like Vision Transformers (ViTs). They operate by dividing an image into smaller patches, treating these patches as sequences similar to words in a sentence, and applying self-attention mechanisms to capture relationships between patches. This approach allows transformers to model long-range dependencies and global context within an image, which is particularly beneficial for complex visual tasks.
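The patch-splitting step can be sketched in a few lines of Python. This minimal example assumes a square grayscale image stored as nested lists and reads patches in row-major order. In the original Vision Transformer, a 224×224 image split into 16×16 patches yields 196 patches, and each flattened patch then passes through a learned linear projection with added position embeddings, which this sketch omits.

```python
from typing import List

def image_to_patches(img: List[List[int]], patch: int) -> List[List[int]]:
    """Split an H x W image into non-overlapping patch x patch tiles, read in
    row-major order, and flatten each tile into a vector (one 'token' per tile)."""
    h, w = len(img), len(img[0])
    assert h % patch == 0 and w % patch == 0, "image size must be divisible by patch size"
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            patches.append([img[r][c]
                            for r in range(top, top + patch)
                            for c in range(left, left + patch)])
    return patches

img = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 image with pixels 0..15
print(image_to_patches(img, 2))
# [[0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]]
```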

Advantages of Using Transformers

Transformers offer several advantages in image recognition:

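- Global Context Understanding: Whereas each convolution in a CNN looks only at a local neighborhood of pixels, self-attention lets every patch interact with every other patch, so transformers can capture global relationships across an entire image, leading to better performance in tasks requiring holistic understanding.

- Scalability: When pre-trained on very large datasets, Vision Transformers can match or exceed the accuracy of state-of-the-art CNNs while requiring less compute to pre-train. On smaller datasets, however, they typically need more data or stronger regularization than CNNs, because they lack the built-in locality assumptions of convolutions.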

- Versatility: They can be applied to a wide range of tasks, including image classification, object detection, and segmentation, and are particularly effective in scenarios involving multimodal data, such as combining visual and textual information.

Overall, attention mechanisms and transformers represent significant advancements in image recognition, offering improved accuracy and efficiency by leveraging sophisticated techniques for feature emphasis and global context modeling.

Practical Implementations

Building Your Own Image Recognition Model: A Step-by-Step Guide

1. Define the Problem and Gather Data

- Identify the specific image recognition task (e.g., classification, object detection).

- Collect a large and diverse dataset relevant to your task. Ensure the data is labeled correctly.

2. Preprocess the Data

- Clean the dataset by removing duplicates and correcting any labeling errors.

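- Apply data augmentation techniques such as rotation, scaling, and flipping to the training images to increase diversity. Augmentation is normally not applied to validation or test images, so that evaluation reflects real, unmodified data.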

- Normalize the images by scaling pixel values to a standard range (e.g., 0 to 1).
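The scaling step is simple arithmetic, sketched below in Python for an 8-bit grayscale image stored as nested lists (an assumption for illustration). Many pre-trained models additionally expect standardization with a specific mean and standard deviation; `standardize` shows that optional step, and the statistics to use should come from the training set or from the pre-trained model's documentation.

```python
from typing import List

def normalize(img: List[List[int]]) -> List[List[float]]:
    """Scale 8-bit pixel values from [0, 255] to [0.0, 1.0]."""
    return [[p / 255.0 for p in row] for row in img]

def standardize(img: List[List[float]], mean: float, std: float) -> List[List[float]]:
    """Optional extra step: zero-center and rescale using dataset statistics
    (computed on the training set, after scaling to [0, 1])."""
    return [[(p - mean) / std for p in row] for row in img]

print(normalize([[0, 51, 255]]))  # [[0.0, 0.2, 1.0]]
```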

3. Split the Data

- Divide the dataset into training, validation, and test sets (e.g., 70% training, 15% validation, 15% test).
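A minimal Python sketch of a reproducible 70/15/15 split follows; `train_val_test_split` is a hypothetical helper, not a library function. It shuffles once with a fixed seed and then slices. For imbalanced classes, a stratified split that preserves class proportions in each subset is often preferable, and near-duplicate images should end up in the same subset so they do not leak between training and test data.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")

def train_val_test_split(items: Sequence[T], train: float = 0.70, val: float = 0.15,
                         seed: int = 42) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once, then cut into train/validation/test; the remainder goes to test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # a fixed seed makes the split reproducible
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])

train_set, val_set, test_set = train_val_test_split(range(100))
print(len(train_set), len(val_set), len(test_set))  # 70 15 15
```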

4. Choose a Model Architecture

- Select an appropriate model architecture based on your task. For beginners, pre-trained models like VGG16, ResNet, or MobileNet are good starting points.

5. Configure the Model

- Set up the model with the chosen architecture. If using a pre-trained model, decide which layers to fine-tune.

6. Compile the Model

- Choose an optimizer (e.g., Adam, SGD) and a loss function (e.g., categorical cross-entropy for classification tasks).

- Set evaluation metrics (e.g., accuracy).
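To show what the loss and metric compute, here is a minimal pure-Python version for a single example, assuming the model outputs raw scores (logits) that softmax turns into class probabilities. Frameworks provide optimized, numerically stable implementations, such as Keras' `CategoricalCrossentropy` and PyTorch's `torch.nn.CrossEntropyLoss`, so this sketch is for understanding only.

```python
import math
from typing import List, Sequence

def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def categorical_cross_entropy(probs: Sequence[float], true_class: int) -> float:
    """Loss for one example: the negative log of the probability given to the true class."""
    return -math.log(probs[true_class])

def accuracy(pred_classes: Sequence[int], true_classes: Sequence[int]) -> float:
    return sum(p == t for p, t in zip(pred_classes, true_classes)) / len(true_classes)

probs = softmax([2.0, 1.0, 0.1])  # raw scores (logits) for 3 classes
print(round(categorical_cross_entropy(probs, 0), 3))  # 0.417
print(accuracy([0, 2, 1, 1], [0, 1, 1, 1]))           # 0.75
```

The loss shrinks toward 0 as the probability assigned to the true class approaches 1, which is what the optimizer pushes for during training.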

7. Train the Model

- Train the model on the training set while monitoring performance on the validation set.

- Use callbacks like early stopping to prevent overfitting.
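The logic behind an early-stopping callback can be sketched in Python as follows; `early_stopping_epoch` is a hypothetical helper that replays a list of per-epoch validation losses. Keras' `tf.keras.callbacks.EarlyStopping` exposes similar settings (`monitor`, `patience`, `min_delta`) and can also restore the best weights with `restore_best_weights=True`.

```python
from typing import Sequence

def early_stopping_epoch(val_losses: Sequence[float], patience: int = 3,
                         min_delta: float = 0.0) -> int:
    """Return the epoch index at which training would stop: the point where validation
    loss has failed to improve by more than min_delta for `patience` consecutive epochs.
    Returns the last epoch if the rule never triggers."""
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1

losses = [0.90, 0.70, 0.60, 0.62, 0.61, 0.65, 0.50]
print(early_stopping_epoch(losses, patience=3))  # 5
```

Note that training stops at epoch 5 even though epoch 6 would have improved; a larger `patience` trades extra training time for a lower risk of stopping too soon.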

8. Evaluate the Model

- Test the model on the test set to assess its performance.

- Analyze metrics such as accuracy, precision, recall, and F1 score.
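For a binary task, precision, recall, and F1 follow directly from counts of true positives, false positives, and false negatives. A minimal Python sketch follows; scikit-learn's `precision_recall_fscore_support` and `classification_report` compute the same quantities, including per-class and averaged versions for multi-class problems.

```python
from typing import Sequence, Tuple

def precision_recall_f1(y_true: Sequence[int], y_pred: Sequence[int],
                        positive: int = 1) -> Tuple[float, float, float]:
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false alarms
    fn = sum(t == positive and p != positive for t, p in pairs)  # misses
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 1, 0]
print([round(m, 3) for m in precision_recall_f1(y_true, y_pred)])  # [0.6, 0.75, 0.667]
```

Here accuracy is also 0.625 (5 of 8 correct), but precision and recall show where the errors come from: two false alarms and one miss.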

9. Deploy the Model

- Once satisfied with the model’s performance, deploy it in a production environment.

- Continuously monitor and update the model as needed.

Tips and Best Practices

- Start with a simple model and gradually increase complexity.

- Use transfer learning to leverage pre-trained models for better accuracy and efficiency.

- Regularly evaluate and update the model to maintain performance.

Common Tools and Frameworks: TensorFlow, PyTorch, OpenCV

TensorFlow

Pros:

- Highly scalable and suitable for production environments.

- Extensive community support and documentation.

- Offers TensorFlow Lite for mobile and embedded devices.

Cons:

- Steeper learning curve for beginners.

- Can be more verbose compared to other frameworks.

PyTorch

Pros:

- Intuitive and easy to use, especially for research and prototyping.

- Strong support for dynamic computation graphs, making it flexible.

- Growing community and increasing adoption in academia and industry.

Cons:

- Historically less mature for production deployment compared to TensorFlow, though this is changing with tools like TorchServe.

OpenCV

Pros:

- Comprehensive library for computer vision tasks beyond deep learning, such as image processing and video analysis.

- Lightweight and efficient for real-time applications.

Cons:

- Primarily focused on traditional computer vision techniques. Its `dnn` module can run inference with pre-trained networks, but it is not designed for training deep learning models the way TensorFlow and PyTorch are.

These tools and frameworks provide a robust foundation for building and deploying image recognition models, each offering unique features and capabilities suited to different stages of development and application needs.

Real-World Applications of Image Recognition

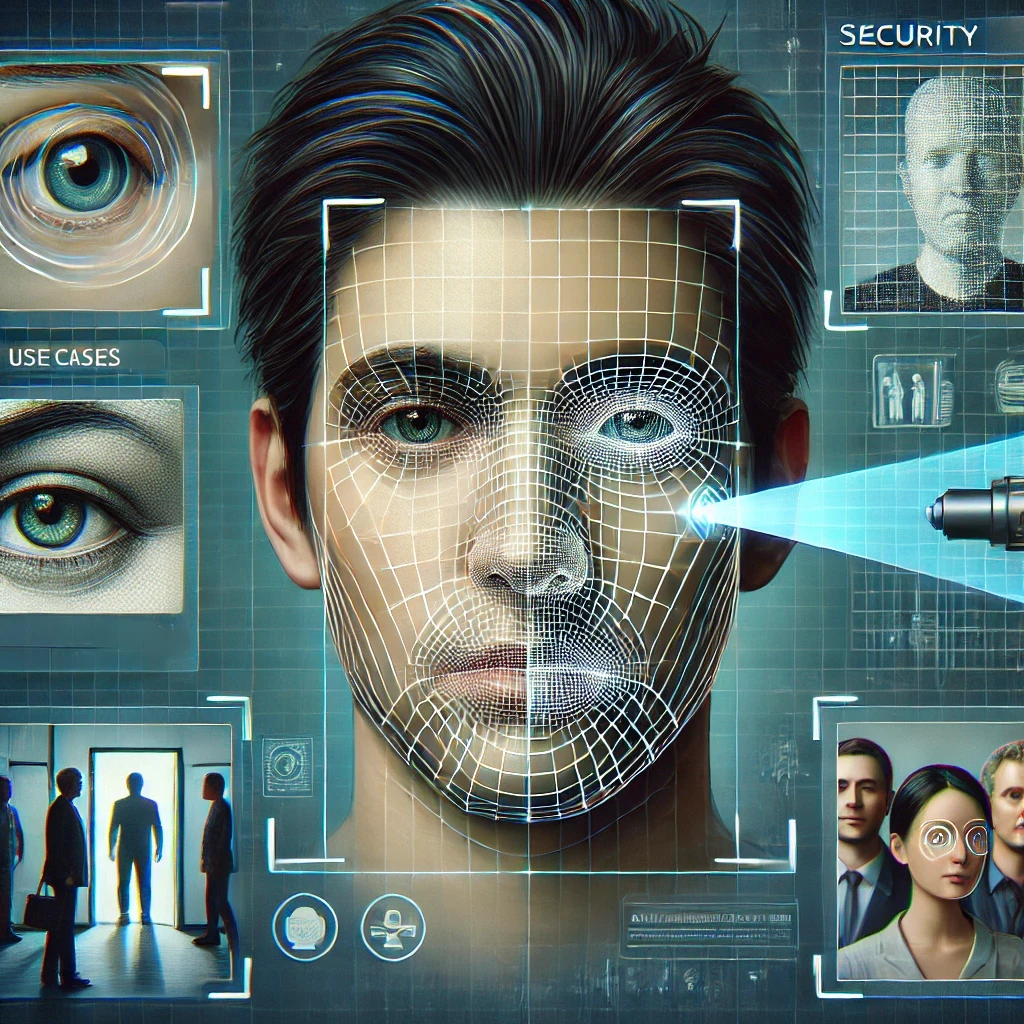

Facial Recognition Technology

Use Cases in Security, Authentication, and Social Media

Facial recognition technology is widely used in various sectors:

- Security: Airports and public spaces use facial recognition for surveillance and security checks, enhancing safety by quickly identifying individuals.

- Authentication: Smartphones, such as iPhones with Apple’s Face ID, use facial recognition for secure and convenient user authentication.

- Social Media: Platforms have used facial recognition to suggest tags for people in photos automatically, increasing user interaction and engagement. Facebook, for example, offered this feature until it shut down its face recognition system in 2021.

While facial recognition offers significant benefits, it also raises privacy concerns, as it involves collecting and storing sensitive biometric data.

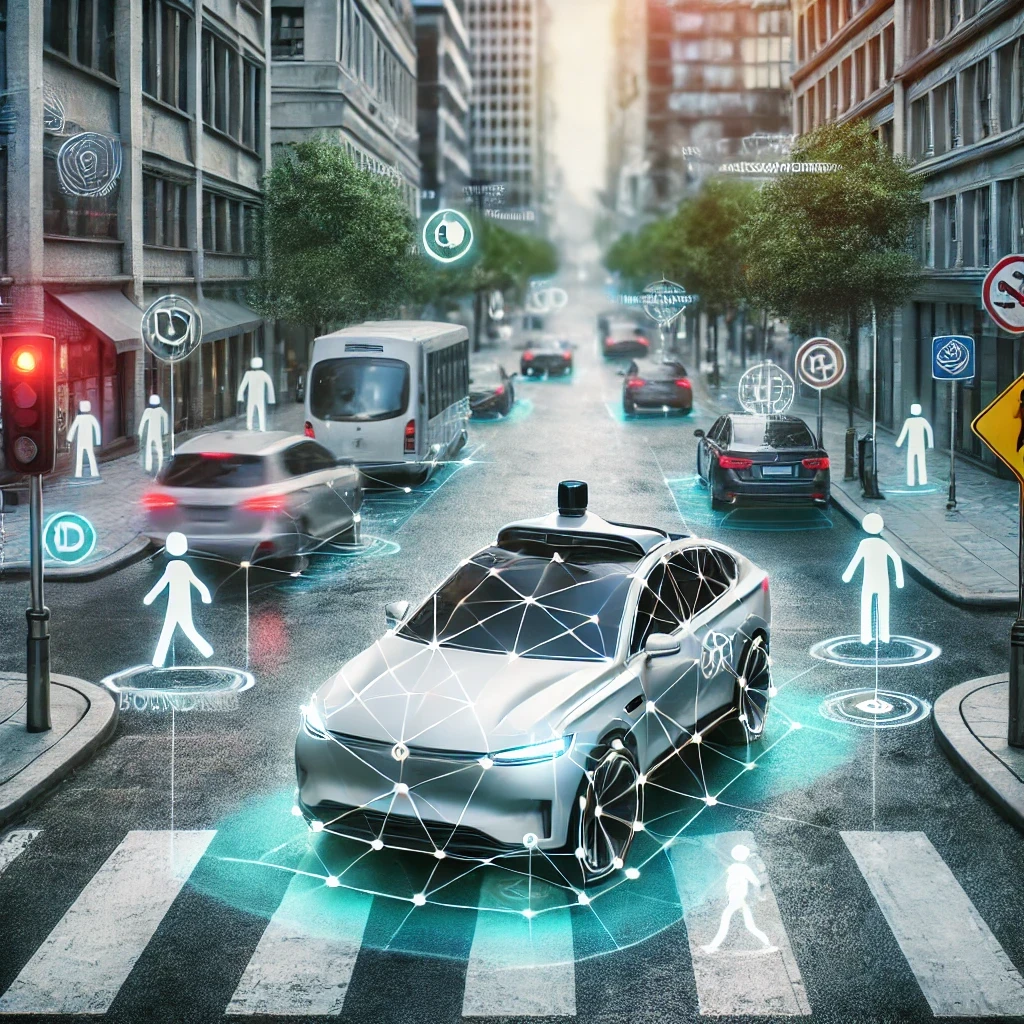

Autonomous Vehicles

Role of Image Recognition in Self-Driving Cars

Image recognition is crucial for autonomous vehicles, enabling them to perceive and interpret their surroundings. Cameras and sensors capture images of the environment, which are then processed to detect and classify objects such as pedestrians, vehicles, and road signs. This information is vital for navigation and decision-making, allowing self-driving cars to operate safely and efficiently in complex environments.

Examples of Image Recognition in Action

- Object Detection: Identifying obstacles and other vehicles on the road.

- Traffic Sign Recognition: Detecting and interpreting traffic signals and signs to comply with road rules.

- Pedestrian Detection: Recognizing pedestrians to prevent accidents and ensure safety.

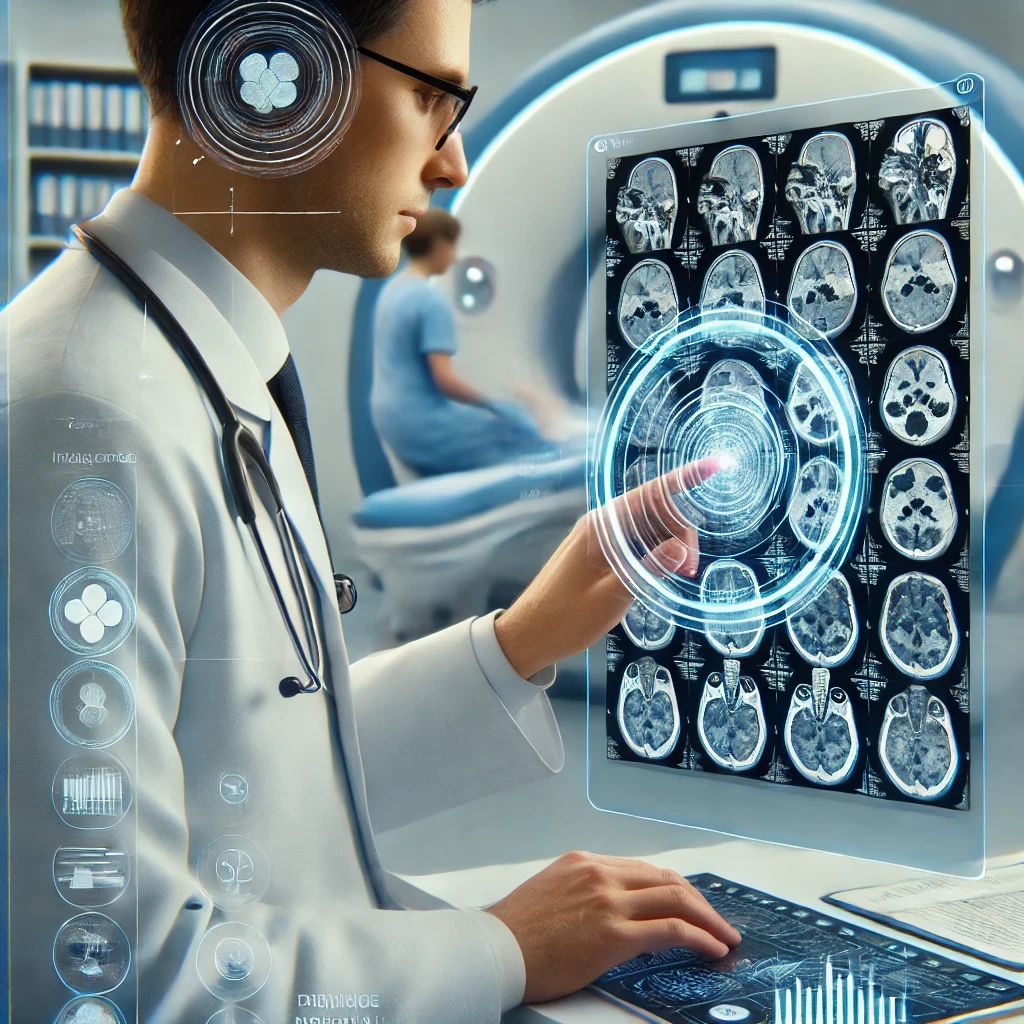

Medical Imaging

Applications in Diagnostics and Treatment

Image recognition plays a transformative role in medical imaging by enhancing diagnostic accuracy and treatment planning:

- Diagnostics: AI algorithms analyze medical images like X-rays, MRIs, and CT scans to detect abnormalities such as tumors, fractures, and infections.

- Treatment Planning: Image segmentation helps delineate organs and tissues, aiding in precise treatment delivery, such as radiation therapy.

Benefits of Image Recognition in Healthcare

- Improved Accuracy: Reduces human error and increases diagnostic consistency.

- Efficiency: Accelerates the analysis process, allowing for quicker patient diagnosis and treatment.

- Innovation: Enables new research opportunities and medical advancements by analyzing large datasets.

Retail and E-commerce

Enhancing Customer Experience and Inventory Management

Image recognition technology is revolutionizing the retail industry by improving customer experience and operational efficiency:

- Smart Shopping Carts: Automatically recognize and tally items, streamlining the checkout process and reducing wait times.

- Customer Verification: Facial recognition for loyalty programs and personalized promotions enhances security and customer satisfaction.

- Smart Shelves: Monitor inventory levels in real-time, ensuring timely restocking and reducing stockouts.

Examples of Image Recognition in Retail

- Visual Search: Allows customers to find products by uploading images, enhancing the shopping experience.

- Product Tagging: Automates inventory categorization, improving searchability and management.

- Smart Mirrors: Provide virtual try-on experiences, increasing customer engagement and sales.

Image recognition continues to drive innovation across various industries, offering significant benefits in terms of efficiency, accuracy, and user experience.

Ethical Considerations in Image Recognition

Privacy Concerns

Potential Privacy Issues with Image Recognition

Image recognition technology, particularly facial recognition, poses significant privacy concerns. The ability to identify individuals in public spaces without their consent raises issues related to surveillance and personal data collection. This can lead to unauthorized data use, profiling, and potential misuse by both private entities and governments.

Strategies to Address Privacy Concerns

To mitigate privacy issues, several strategies can be implemented:

- Regulatory Frameworks: Enforce regulations like the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) to protect personal data and ensure transparency in data usage.

- Anonymization Techniques: Use methods to anonymize data, such as blurring faces or removing identifiable features, to protect individual privacy.

- Consent Mechanisms: Implement systems that require explicit consent from individuals before collecting or using their image data.
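As an illustration of the anonymization strategy, the Python sketch below pixelates a rectangular region by replacing each tile with its average value. It assumes a grayscale image stored as nested lists and a face bounding box supplied by a separate face detector, which is not shown; `pixelate_region` and the box coordinates are hypothetical. Larger blocks remove more detail.

```python
from typing import List, Tuple

Image = List[List[int]]

def pixelate_region(img: Image, box: Tuple[int, int, int, int], block: int) -> Image:
    """Anonymize box = (top, left, bottom, right), end-exclusive, by replacing each
    block x block tile inside it with the tile's average pixel value."""
    top, left, bottom, right = box
    out = [row[:] for row in img]  # work on a copy; the original image is unchanged
    for r0 in range(top, bottom, block):
        for c0 in range(left, right, block):
            rows = range(r0, min(r0 + block, bottom))
            cols = range(c0, min(c0 + block, right))
            avg = sum(img[r][c] for r in rows for c in cols) // (len(rows) * len(cols))
            for r in rows:
                for c in cols:
                    out[r][c] = avg
    return out

img = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4x4 toy image
# Hypothetical face box covering the top-left 2x2 corner.
print(pixelate_region(img, (0, 0, 2, 2), block=2))
# [[2, 2, 2, 3], [2, 2, 6, 7], [8, 9, 10, 11], [12, 13, 14, 15]]
```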

Bias and Fairness

Risks of Bias in Image Recognition Models

Bias in image recognition models can result from skewed training datasets that do not adequately represent diverse populations. This can lead to higher error rates for certain demographic groups, particularly minorities and marginalized communities, exacerbating existing social inequalities.

Techniques to Ensure Fairness and Accuracy

- Diverse Datasets: Use balanced and diverse datasets that reflect the entire population to minimize bias.

- Bias Detection and Mitigation: Implement techniques to detect and correct biases during model training, such as fairness-aware algorithms and regular audits.

- Inclusive Development Teams: Involve diverse teams in the development process to bring multiple perspectives and reduce biases in AI systems.
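A basic building block of a bias audit is comparing a model's error rate across demographic groups. The Python sketch below assumes each evaluation example carries a group label; the labels, predictions, and groups are made up for illustration. Error rate is only one possible fairness criterion (others compare, for example, false positive rates between groups), and what size of gap is acceptable is a policy decision rather than something the code can settle.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence

def error_rate_by_group(y_true: Sequence[int], y_pred: Sequence[int],
                        groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Audit helper: the model's error rate for each group in the evaluation set."""
    errors: Dict[Hashable, int] = defaultdict(int)
    counts: Dict[Hashable, int] = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        counts[g] += 1
        errors[g] += int(t != p)
    return {g: errors[g] / counts[g] for g in counts}

rates = error_rate_by_group(
    y_true=[1, 0, 1, 1, 0, 1, 0, 1],
    y_pred=[1, 0, 1, 1, 1, 0, 0, 0],
    groups=["A", "A", "A", "A", "B", "B", "B", "B"],
)
print(rates)  # {'A': 0.0, 'B': 0.75}
gap = max(rates.values()) - min(rates.values())  # a large gap flags the model for review
```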

Transparency and Accountability

Importance of Transparency in AI Systems

Transparency in AI systems is crucial for building trust and ensuring ethical use. It involves making the decision-making processes of AI models understandable and accessible to users, which helps in identifying biases and ensuring fairness.

Accountability Measures for Image Recognition Applications

- Explainability: Implement tools that provide insights into how AI models make decisions, allowing users to understand and verify outcomes.

- Auditing and Logging: Regularly audit AI systems to ensure compliance with ethical standards and maintain logs of decisions for accountability.

- Public Oversight: Engage stakeholders, including the public, in the development and deployment of AI systems to ensure they align with societal values and interests.

By addressing these ethical considerations, image recognition technologies can be developed and deployed in a manner that respects privacy, ensures fairness, and maintains transparency and accountability.

Case Studies of Image Recognition in Action

Case Study 1: Healthcare Diagnosis

Detailed Example of Image Recognition in Healthcare

Image recognition technology is revolutionizing healthcare by enhancing diagnostic accuracy and efficiency. AI-powered image recognition is particularly effective in processing medical imagery, such as X-rays, MRIs, and CT scans, to identify various conditions. For instance, it is used for early detection of cancer and tumors, where precision is critical for successful treatment outcomes. Models such as convolutional neural networks (CNNs) are used to detect abnormalities, segment images into different regions, and classify medical images into categories such as benign or malignant.

Results and Impact on Patient Outcomes

Image recognition in healthcare can improve diagnostic accuracy and consistency and reduce human error. It also allows large volumes of images to be analyzed quickly, which increases efficiency and productivity in medical settings. Faster and more accurate diagnoses can, in turn, improve patient outcomes by enabling timely interventions and personalized treatment plans.

Case Study 2: Security and Surveillance

Application in Enhancing Security Measures

Image recognition is a critical component in modern security and surveillance systems. It is used to automatically detect and track objects or individuals within a frame, enhancing the ability to monitor and respond to potential threats. In security applications, facial recognition is employed to identify individuals from a database, aiding in law enforcement and access control. Image processing techniques also enable intrusion detection and anomaly detection, which are essential for maintaining security in sensitive areas.

Success Stories and Challenges

Image recognition technology has successfully enhanced security measures by providing real-time threat assessments and improving the accuracy of surveillance systems. However, challenges remain, such as ensuring the privacy and ethical use of collected data. Additionally, the technology must be continually updated to handle new types of threats and adapt to different environments.

Case Study 3: Retail and Customer Insights

Use of Image Recognition to Gain Customer Insights

In the retail sector, image recognition is used to optimize inventory management, enhance customer experience, and gather valuable data insights. Smart shopping carts equipped with image recognition can automatically recognize items, streamlining the checkout process. Facial recognition can personalize customer service by granting access to loyalty rewards and tailored promotions. Additionally, smart mirrors in stores use image recognition to suggest outfit ideas and provide virtual try-on experiences.

Benefits and Real-World Examples

Image recognition in retail leads to increased operational efficiency by automating routine tasks and reducing manual errors. For example, smart mirrors have been shown to increase customer basket size by up to 20%. Retailers can prevent stockouts and optimize product placement by using image recognition to monitor inventory levels in real-time. These applications not only improve customer satisfaction but also provide retailers with insights to make informed business decisions.

These case studies illustrate the transformative impact of image recognition across various industries, highlighting its potential to improve efficiency, accuracy, and customer experience.

Future Trends in Image Recognition

Integration with Augmented Reality (AR)

Potential of Combining Image Recognition with AR

The integration of image recognition with augmented reality (AR) holds significant potential for creating immersive and interactive experiences. By combining these technologies, AR applications can dynamically overlay digital information onto real-world environments based on the recognition of objects, faces, or scenes. This synergy allows for enhanced user interactions and more intuitive interfaces.

Future Applications and Innovations

- Retail and Shopping: AR can enhance the shopping experience by allowing customers to visualize products in their environment before purchase. Image recognition can identify products and provide additional information or recommendations in real-time.

- Education and Training: AR applications can use image recognition to provide interactive learning experiences, such as identifying historical landmarks or biological specimens and offering detailed explanations.

- Healthcare: AR combined with image recognition can assist in medical training and surgeries by overlaying anatomical information onto a patient’s body, improving precision and outcomes.

Enhanced Personalization in Marketing

How Image Recognition Can Personalize Marketing Efforts

Image recognition technology enables marketers to analyze visual data and tailor marketing strategies to individual preferences. By recognizing products, people, or scenes in images, businesses can deliver personalized content and recommendations to customers, enhancing engagement and satisfaction.

Examples of Successful Personalization Strategies

- Product Recommendations: Retailers use image recognition to analyze the visual attributes (such as color, pattern, and style) of products customers browse and buy, and then suggest visually similar items that match their interests.

- Targeted Advertising: By identifying objects or environments in user-generated content, brands can create targeted ads that resonate with specific audiences, increasing conversion rates.

- Customer Segmentation: Image recognition helps in segmenting customers based on visual data, allowing for more precise and effective marketing campaigns.
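Visual recommendations like these are often built by comparing image embeddings, the feature vectors an image model produces for each product photo. The minimal Python sketch below ranks catalog items by cosine similarity to a query item. The 3-D embeddings and product names are hypothetical; a real system would use much longer vectors from a trained model and an approximate nearest-neighbor index for large catalogs.

```python
import math
from typing import Dict, List, Sequence

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def similar_products(query_id: str, embeddings: Dict[str, List[float]], k: int = 2) -> List[str]:
    """Rank other catalog items by cosine similarity of their image embeddings."""
    q = embeddings[query_id]
    others = [pid for pid in embeddings if pid != query_id]
    return sorted(others, key=lambda pid: cosine(q, embeddings[pid]), reverse=True)[:k]

# Hypothetical embeddings; a real system would take these from an image model such as a CNN.
catalog = {
    "red_dress":     [0.9, 0.1, 0.0],
    "crimson_dress": [0.8, 0.2, 0.1],
    "blue_jeans":    [0.0, 0.9, 0.4],
    "red_scarf":     [0.7, 0.0, 0.3],
}
print(similar_products("red_dress", catalog))  # ['crimson_dress', 'red_scarf']
```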

Advancements in Real-Time Processing

Improvements in Real-Time Image Recognition

Real-time image recognition analyzes and interprets visual data as it is captured, fast enough to keep pace with the incoming stream (for example, processing every frame of a live video feed). Recent advancements have focused on improving both the speed and the accuracy of this processing through efficient algorithms and hardware acceleration.
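Whether a system counts as "real-time" comes down to simple arithmetic: per-frame processing must fit within the time between frames. The Python sketch below computes that budget; the latency figures are hypothetical, and it ignores pre- and post-processing overhead as well as pipelining across frames.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to process each frame if the system must keep up."""
    return 1000.0 / fps

def keeps_up(latency_ms: float, fps: float) -> bool:
    """True if the per-frame inference latency fits within the frame budget."""
    return latency_ms <= frame_budget_ms(fps)

print(round(frame_budget_ms(30), 1))           # 33.3
print(keeps_up(25.0, 30), keeps_up(50.0, 30))  # True False
```

A model that needs 50 ms per frame on a 30 fps camera would fall behind and have to skip frames, which is why efficient architectures and hardware acceleration matter here.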

Potential Impact on Various Industries

- Security and Surveillance: Real-time facial recognition and anomaly detection enhance security measures by providing immediate alerts and responses to potential threats.

- Retail: Real-time image recognition enables features like instant product searches and smart checkout systems, improving customer experience and operational efficiency.

- Healthcare: In medical imaging, real-time analysis allows for quicker diagnoses and treatment planning, improving patient care and outcomes.

These trends highlight the growing importance of image recognition technology across various sectors, driven by advancements in AI and machine learning. As these technologies continue to evolve, they will unlock new possibilities and transform how we interact with the world.

Common Misconceptions About Image Recognition

Myth 1: Image Recognition is Perfect

Addressing Common Misconceptions About Accuracy

A common misconception about image recognition is that it is infallible and can achieve perfect accuracy. In reality, image recognition systems are not flawless and can be susceptible to errors due to various factors such as poor image quality, occlusions, and biases in training data. For instance, algorithmic bias can lead to errors that affect specific groups of people, resulting in unfair outcomes. Additionally, adversarial examples can be used to fool even state-of-the-art models, highlighting the limitations of current image recognition systems.
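The sketch below illustrates how adversarial examples work, using the Fast Gradient Sign Method (FGSM) against a toy linear classifier in Python. For a linear model the gradient of the score with respect to the input is just the weight vector, so each pixel is nudged by a small amount `eps` in the direction that lowers the score. The weights, image, and `eps` are made-up values; attacking a deep network applies the same idea using gradients computed by a framework.

```python
from typing import List, Sequence

def sign(v: float) -> int:
    return (v > 0) - (v < 0)

def linear_score(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Toy two-class model: a score above 0 means class A, otherwise class B."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def fgsm_linear(w: Sequence[float], x: Sequence[float], eps: float) -> List[float]:
    """FGSM against a class-A input: the gradient of the score with respect to x is w,
    so shift every pixel by eps in the direction that lowers the score."""
    return [xi - eps * sign(wi) for xi, wi in zip(x, w)]

w = [0.1 if i % 2 == 0 else -0.1 for i in range(100)]  # toy weights for 100 pixels
x = [0.52 if wi > 0 else 0.50 for wi in w]             # toy image, pixel values in [0, 1]
x_adv = fgsm_linear(w, x, eps=0.02)
print(round(linear_score(w, 0.0, x), 3), round(linear_score(w, 0.0, x_adv), 3))  # 0.1 -0.1
```

No pixel changes by more than 0.02, yet the small shifts add up across all 100 pixels and flip the prediction from class A to class B.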

Realistic Expectations and Limitations

While image recognition technology has made significant advancements, it is important to maintain realistic expectations about its capabilities. Systems can achieve high accuracy under controlled conditions but may struggle with variations in lighting, angles, and backgrounds. Furthermore, the technology is not inherently biased, but biases can arise from the data used to train models. Continuous improvements and diverse datasets are necessary to enhance accuracy and fairness.

Myth 2: AI Image Recognition is Fully Autonomous

Clarifying the Role of Human Oversight

Another misconception is that AI image recognition systems operate completely autonomously without the need for human intervention. In practice, human oversight is crucial to ensure accuracy and safety. For example, in law enforcement, facial recognition technology is used as a tool to generate potential matches, but human analysts make the final identification decisions. Human oversight helps mitigate risks associated with automation bias and ensures that AI systems align with human values.

Examples of Human-in-the-Loop Systems

Human-in-the-loop systems are designed to incorporate human judgment and decision-making into the AI process. In healthcare, AI models assist radiologists by highlighting potential areas of concern in medical images, but the final diagnosis is made by a human expert. Similarly, in security applications, human operators review and verify alerts generated by AI systems to prevent false positives and ensure accurate responses.
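A human-in-the-loop alert flow for the security example can be sketched in Python as below. The model only flags and ranks detections; a response happens only after an operator confirms an alert. The event IDs, labels, scores, and the 0.5 threshold are hypothetical, and the threshold is a tunable policy choice.

```python
from typing import Dict, List, Tuple

Detection = Tuple[str, str, float]  # (event_id, label, model confidence)

def review_queue(detections: List[Detection], alert_threshold: float = 0.5) -> List[Detection]:
    """Flag detections at or above the threshold and order them for human review,
    most confident first. Nothing is acted on automatically."""
    flagged = [d for d in detections if d[2] >= alert_threshold]
    return sorted(flagged, key=lambda d: d[2], reverse=True)

def confirmed_alerts(queue: List[Detection], decisions: Dict[str, bool]) -> List[str]:
    """Only alerts that a human operator explicitly confirmed lead to a response."""
    return [event_id for event_id, _, _ in queue if decisions.get(event_id) is True]

queue = review_queue([
    ("cam2_0413", "intruder", 0.55),
    ("cam1_0412", "intruder", 0.91),
    ("cam3_0414", "intruder", 0.20),
])
print([event_id for event_id, _, _ in queue])  # ['cam1_0412', 'cam2_0413']
# The operator rejects cam2_0413 (say, a shadow) as a false positive.
print(confirmed_alerts(queue, {"cam1_0412": True, "cam2_0413": False}))  # ['cam1_0412']
```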

By understanding these misconceptions, stakeholders can better appreciate the current state of image recognition technology and the importance of human involvement in its deployment and use.

FAQs About Image Recognition in AI

What is Image Recognition in AI?

Image recognition in AI refers to the process of identifying and categorizing objects and patterns within images using machine learning algorithms. It involves converting images into numerical or symbolic information that can be processed by computers to understand visual content, similar to human vision.

How Does AI Identify Images?

AI identifies images using deep learning models, particularly convolutional neural networks (CNNs), which process image data through layers of interconnected nodes. These models learn to recognize patterns and features, allowing them to classify images accurately based on the training data they have been exposed to.

Is Image Recognition the Same as Computer Vision?

While related, image recognition is a component of computer vision. Computer vision is a broader field that encompasses various tasks aimed at replicating human vision in machines, including image recognition, object detection, and image segmentation.

How Accurate Can Image Recognition Be?

The accuracy of image recognition systems largely depends on the quality of the algorithm and the data it was trained on. In some specific tasks, image recognition systems have achieved accuracy levels comparable to or even surpassing human performance.

Can Image Recognition Work in Real-Time?

Yes, with sufficient computational power and optimized software, image recognition can operate in real-time. This capability is crucial for applications such as autonomous driving and video surveillance, where immediate processing and response are necessary.

Are There Any Privacy Concerns Associated with Image Recognition?

Yes, privacy concerns are significant, especially with technologies like facial recognition. Issues include the collection and use of personal data without consent, potential misuse of the technology, and the risk of false identifications. These concerns necessitate careful consideration of ethical guidelines and regulatory compliance.

What Are Some Common Applications of Image Recognition?

Image recognition has a wide range of applications, including facial recognition, object detection, medical imaging, quality control in manufacturing, augmented reality, and content moderation on social media platforms.

Conclusion

Image recognition in AI has evolved significantly, transforming how machines interpret and understand visual data. This journey has been marked by the development of sophisticated algorithms, particularly deep learning models like convolutional neural networks (CNNs), which have enabled machines to achieve remarkable accuracy in identifying and classifying images. These advancements have led to widespread applications across various sectors, including healthcare, security, retail, and autonomous vehicles, where image recognition enhances efficiency, accuracy, and user experience.

Despite its successes, image recognition technology faces challenges, such as dealing with variability in image quality, addressing biases, and ensuring privacy and ethical use. Efforts to overcome these challenges involve using diverse datasets, implementing human oversight, and developing robust regulatory frameworks.

Looking to the future, image recognition is poised to become even more integral to daily life and industry. The integration of image recognition with augmented reality (AR) promises to create immersive experiences, while advancements in real-time processing will further enhance applications in security, healthcare, and retail. As AI technologies continue to evolve, image recognition will likely become more accurate, efficient, and ethically aligned, driving innovation and shaping the future of human-machine interaction.

Additional Resources

Recommended Books and Articles

Books:

- Computer Vision: Models, Learning, and Inference by Simon J.D. Prince: This book provides a comprehensive overview of the relationships between image data and object classes, making it ideal for those interested in developing functional vision systems.

- Deep Learning for Vision Systems by Mohamed Elgendy: Covers deep learning and computer vision topics, including image classification, object detection, and transfer learning.

- Modern Computer Vision with PyTorch by V Kishore Ayyadevara and Yeshwanth Reddy: Offers hands-on solutions to over 50 computer vision problems using PyTorch.

Articles:

- “10 Papers You Should Read to Understand Image Classification in the Deep Learning Era” provides a curated list of influential papers that have shaped the field of image classification.

- “AI Image Recognition – Exploring Limitations and Bias” discusses the limitations and biases in AI image recognition systems and offers insights into improving fairness.

Online Courses and Tutorials

- Class Central: Offers a variety of image classification courses from top universities like Stanford and CU Boulder. These courses cover topics from basic image classification to advanced convolutional neural networks.

- DataCamp: Provides courses on image recognition techniques, including CNNs and deep learning, suitable for both beginners and advanced learners.

Research Papers and Studies

Influential Papers:

- Gradient-based Learning Applied to Document Recognition by Yann LeCun et al.: This foundational paper presented the LeNet-5 architecture, a precursor to modern CNNs.

- ImageNet Classification with Deep Convolutional Neural Networks by Alex Krizhevsky et al.: Introduced the AlexNet model, which significantly advanced the field of deep learning and image recognition.

- Deep Residual Learning for Image Recognition by Kaiming He et al.: Presented the ResNet architecture, whose residual (skip) connections address the degradation problem of very deep networks and made networks with more than 100 layers practical to train.