Artificial Neural Networks (ANNs): What They Are & How They Work (Beginner Guide)

Artificial Neural Networks (ANNs) are one of the most important building blocks of modern artificial intelligence. They power technologies like image recognition, voice assistants, recommendation systems, and many of the AI tools we use every day.

In this beginner-friendly guide, you’ll learn what artificial neural networks are, how they work, the most common types of neural networks, where they are used in real life, and why they matter for the future of AI.

Beginner Summary

- Artificial Neural Networks are inspired by the human brain

- They consist of interconnected nodes called neurons

- ANNs learn patterns from data through training

- They are the foundation of deep learning

- Neural networks power image recognition, speech, and language AI

What Are Artificial Neural Networks?

An Artificial Neural Network (ANN) is a machine learning model designed to recognize patterns by learning from data. It is inspired by the structure and function of the human brain, which processes information through interconnected neurons.

In an ANN, artificial neurons work together to analyze input data, transform it, and produce an output. Over time, the network improves its accuracy by adjusting internal parameters based on feedback.

Artificial neural networks are a core part of machine learning and are the basis of deep learning, the branch of machine learning that uses networks with many layers.

How Artificial Neural Networks Work

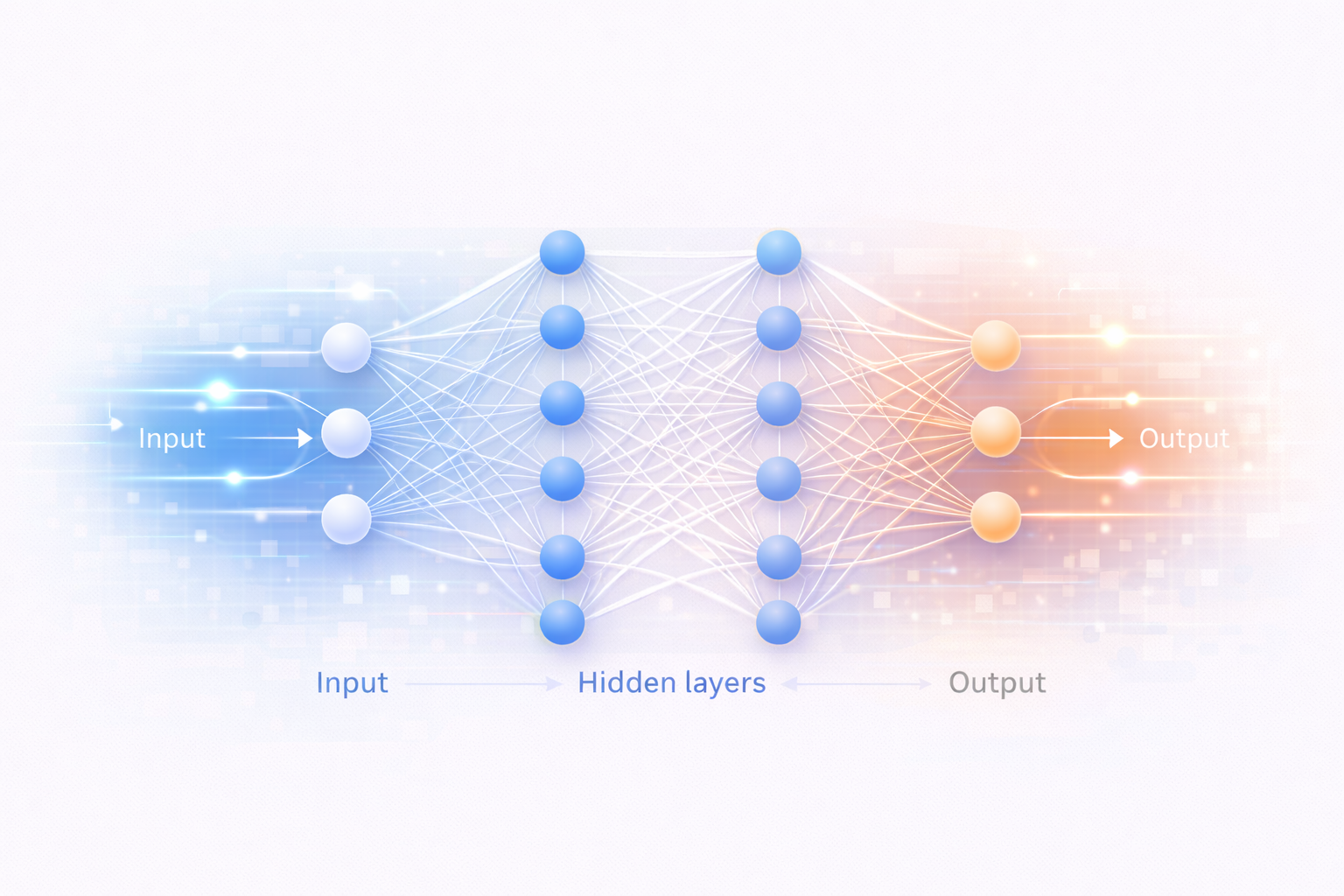

At a high level, neural networks process information in layers. Data flows from the input layer, through one or more hidden layers, and finally to an output layer.

Each layer transforms the output of the layer before it, so later layers can represent more complex patterns than earlier ones.

Core Components of an ANN

Neurons

A neuron is the basic unit of a neural network. It receives input values, applies a mathematical operation, and produces an output.

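Typically, a neuron multiplies each input by a weight, adds the results together with a bias, and passes the sum through an activation function before sending it forward. The weights are what let a neuron treat some inputs as more important than others.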
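As a rough illustration, a single neuron can be sketched in a few lines of Python. The sigmoid activation and every number below are assumptions chosen for the example, not values from any particular network.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical inputs, weights, and bias, chosen only for illustration.
output = neuron(inputs=[0.5, -1.0, 2.0], weights=[0.8, 0.2, -0.5], bias=0.1)
print(round(output, 3))  # 0.332
```

The weighted sum here is about -0.7, and the sigmoid turns that into a value a little below 0.5. Other activation functions would give a different output for the same sum.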

Layers

Neural networks are organized into layers:

- Input layer: receives raw data

- Hidden layers: process and transform the data

- Output layer: produces the final result

Networks with many hidden layers are called deep neural networks.
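The flow from input layer to hidden layer to output layer can be sketched as follows. This is a minimal example, assuming fully connected layers with a sigmoid activation; the layer sizes and weight values are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs, layers):
    """Pass data through each layer in order, from input to output."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
hidden = ([[0.5, -0.6], [0.1, 0.8], [-0.3, 0.2]], [0.0, 0.1, -0.1])
output = ([[1.0, -1.0, 0.5]], [0.2])

prediction = forward([1.0, 2.0], [hidden, output])
print(prediction)  # a list holding one value between 0 and 1
```

Adding more `(weights, biases)` pairs to the list passed to `forward` makes the network deeper without changing the rest of the code.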

Weights and Biases

Weights control how strongly each input influences a neuron’s output, while a bias shifts the neuron’s weighted sum up or down regardless of the inputs. Both are learned during training.
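To see the difference between the two, the short sketch below changes one weight and then the bias while keeping the inputs fixed. The helper `weighted_sum` and all values are hypothetical, and the activation function is left out so the effect is easy to read.

```python
def weighted_sum(inputs, weights, bias):
    """The value a neuron computes before any activation is applied."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias

inputs = [1.0, 1.0]

# Both inputs count equally.
baseline = weighted_sum(inputs, weights=[0.5, 0.5], bias=0.0)

# A larger first weight makes the first input matter more.
first_dominates = weighted_sum(inputs, weights=[2.0, 0.5], bias=0.0)

# A negative bias lowers the result without touching the weights.
shifted = weighted_sum(inputs, weights=[0.5, 0.5], bias=-1.0)

print(baseline, first_dominates, shifted)  # 1.0 2.5 0.0
```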

Training and Backpropagation

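Neural networks learn through training. The network makes predictions, compares them to the correct answers, and measures the error. Backpropagation then works out how much each weight contributed to that error, and an optimization method such as gradient descent adjusts the weights to reduce it.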

This cycle repeats until the network reaches acceptable accuracy.
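The predict, compare, adjust cycle can be sketched with a single neuron that has one weight and one bias. This example assumes a squared-error measure and plain gradient descent, neither of which is specified above, and the data is a made-up toy set. With only one neuron the gradients can be written out directly; in a multi-layer network, backpropagation computes the same kind of gradients layer by layer.

```python
# Toy data following y = 2x + 1 (hypothetical, for illustration only).
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

w, b = 0.0, 0.0        # start with uninformed parameters
learning_rate = 0.05   # assumed step size

for epoch in range(2000):
    grad_w = grad_b = 0.0
    for x, target in data:
        prediction = w * x + b        # 1. predict
        error = prediction - target   # 2. compare with the correct answer
        grad_w += 2 * error * x       # 3. gradient of squared error w.r.t. w
        grad_b += 2 * error           #    and w.r.t. b
    # 4. step against the averaged gradient to reduce the error
    w -= learning_rate * grad_w / len(data)
    b -= learning_rate * grad_b / len(data)

print(round(w, 2), round(b, 2))  # 2.0 1.0
```

After enough repetitions the weight and bias settle close to 2 and 1, the values that generated the data.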

Common Types of Neural Networks

There are several types of neural networks, each suited for specific tasks.

Feedforward Neural Networks

Feedforward neural networks are the simplest form of ANN. Data moves in one direction only, from input to output.

They are commonly used for basic classification and prediction tasks.

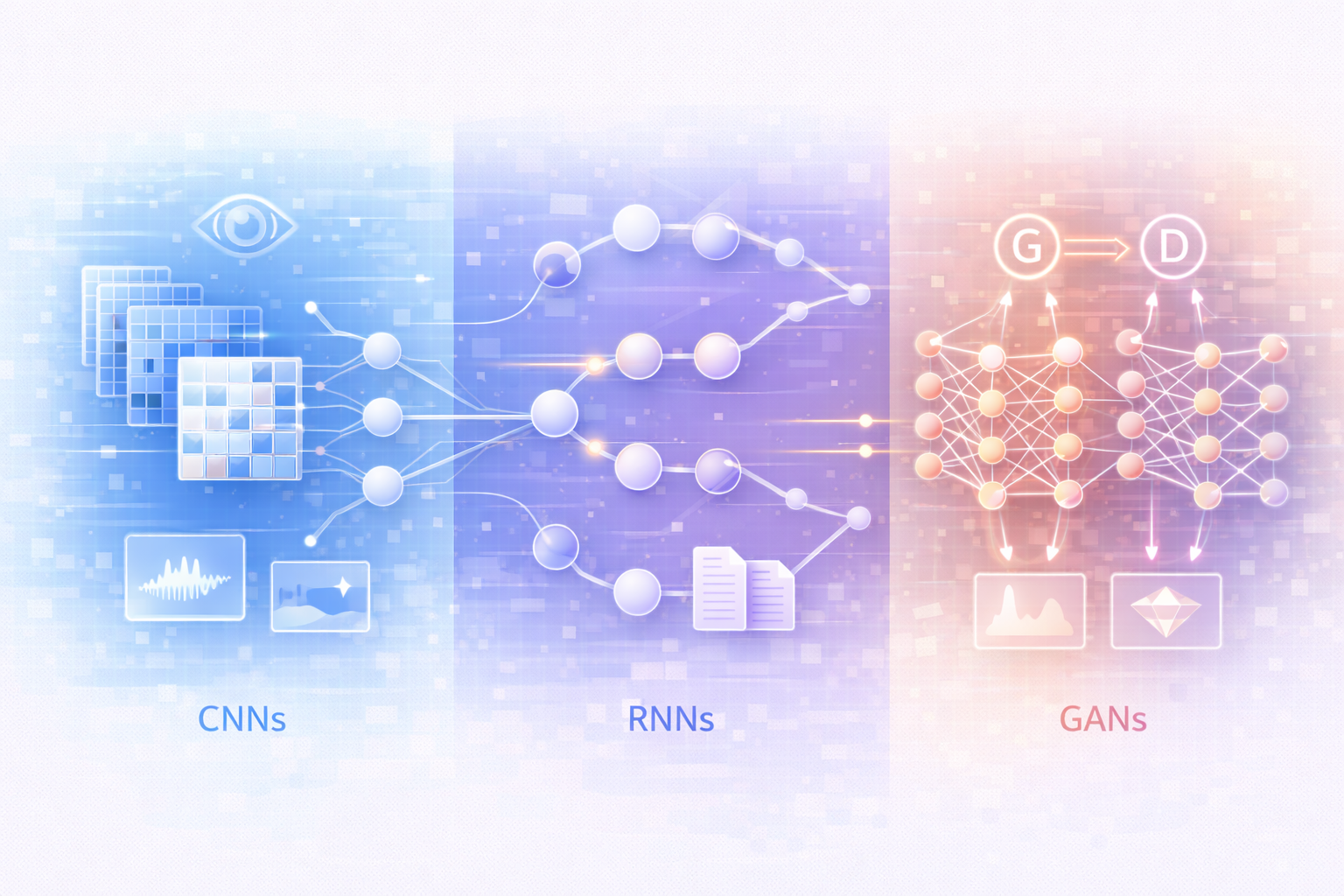

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks are designed to process visual data such as images and videos.

They are widely used in facial recognition, medical imaging, and object detection.
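The "convolutional" part of the name refers to sliding a small grid of weights, called a kernel, across an image. The sketch below is a simplified illustration with no padding and a step of one pixel. The image and the kernel are hand-picked assumptions; in a real CNN the kernel values are learned during training.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]

# Hypothetical 4x4 grayscale image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked kernel that responds to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output is largest in the middle column, exactly where the image changes from dark to bright, which is how a kernel can act as a simple edge detector.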

Recurrent Neural Networks (RNNs)

Recurrent Neural Networks are built for sequential data. They carry a hidden state from one step to the next, so earlier inputs can influence later outputs. This makes them useful for tasks involving time or order.

Common uses include speech recognition, translation, and text generation.
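How a recurrent network keeps track of earlier inputs can be sketched with a single recurrent unit. This is a minimal example, assuming a tanh activation and one number for the hidden state; the weights `w_input` and `w_hidden` are hypothetical values.

```python
import math

def rnn_step(x, hidden, w_input, w_hidden, bias):
    """Combine the current input with the previous hidden state."""
    return math.tanh(w_input * x + w_hidden * hidden + bias)

def run_rnn(sequence, w_input=0.5, w_hidden=0.9, bias=0.0):
    """Feed a sequence through the unit one item at a time."""
    hidden = 0.0  # nothing has been seen yet
    states = []
    for x in sequence:
        hidden = rnn_step(x, hidden, w_input, w_hidden, bias)
        states.append(hidden)
    return states

# The two sequences differ only in their first item.
states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])

print([round(h, 3) for h in states_a])
print([round(h, 3) for h in states_b])
```

Even though the last two inputs are identical, the final hidden state of the first sequence stays above zero while the second stays at zero, because the first input is still carried forward in the hidden state.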

Other Neural Network Types

- LSTM networks: a type of RNN designed to handle long-term dependencies in sequences

- Autoencoders: used for compression and anomaly detection

- Generative Adversarial Networks (GANs): generate realistic images and other content, and are commonly used in generative AI

Why Artificial Neural Networks Matter

Artificial neural networks allow machines to solve problems that were once too complex for traditional, rule-based software.

They enable systems to recognize speech, understand language, identify images, and make accurate predictions.

Real-World Applications of ANNs

Neural networks are used across many industries:

- Healthcare: medical imaging and disease detection

- Finance: fraud detection and risk analysis

- Business: recommendation systems and automation

- Transportation: self-driving technology

- Entertainment: content personalization and creative tools

Challenges and Limitations

Despite their strengths, neural networks have limitations:

- They often require large datasets

- Training can be expensive and time-consuming

- Models are often difficult to interpret

- Poor data quality can lead to biased results

Addressing these challenges is an active area of AI research, especially in ethical AI.

The Future of Neural Networks

Neural networks continue to evolve rapidly. Areas where improvement is expected include:

- More efficient training methods

- Improved transparency and explainability

- Lower energy consumption

- Better integration with other AI systems

Neural networks will remain a core foundation of artificial intelligence.

Frequently Asked Questions

Are neural networks the same as deep learning?

No. Neural networks are the foundation, while deep learning refers to neural networks with many layers.

Do neural networks think like humans?

No. While inspired by the brain, neural networks do not possess consciousness or understanding.

Are neural networks used in generative AI?

Yes. Large neural networks power generative AI systems that create text, images, audio, and video.

Final Thoughts

Artificial neural networks are at the heart of modern artificial intelligence. They allow machines to learn from data, recognize patterns, and solve complex problems.

Understanding ANNs provides a strong foundation for exploring AI, machine learning, and deep learning, and for understanding how intelligent systems are shaping the future.